A Practical Guide to Data Warehouse Data Modeling

Data warehouse data modeling is the architectural blueprint for your analytics infrastructure. It’s the disciplined process of structuring disparate data sources into a coherent framework optimized for fast, reliable querying and reporting. Executed correctly, it enables business intelligence and AI. Done poorly, it creates an expensive and unusable data swamp.

Why Data Warehouse Modeling Is a Strategic Imperative

Building an analytics platform without a data model is like constructing a skyscraper without an architectural plan. It leads to predictable and costly failures: slow queries, inconsistent metrics, and a complete lack of trust in the data from business stakeholders. The entire multi-million dollar investment is jeopardized because the foundation is unsound.

A data model isn’t just a technical artifact; it’s a strategic asset that translates raw, complex data into a logical structure that business teams can understand and leverage.

The Business Case for a Solid Model

A well-designed model provides a clear, logical framework for business teams to interact with data, delivering tangible benefits:

- Optimized Query Performance: By pre-joining and organizing data for analytical workloads, a robust model drastically reduces the time required to query large datasets.

- A Single Source of Truth: A governed model enforces consistent business definitions. Metrics like “customer count” or “monthly recurring revenue” are calculated uniformly across all departments.

- Systematic Scalability: The model provides a roadmap for integrating new data sources or business units without disrupting existing reports and analytical pipelines.

A strong data model acts as the common language between business and technology teams. It ensures that when a sales leader requests “quarterly revenue,” the resulting report aligns perfectly with the CFO’s definition, eliminating ambiguity and fostering trust.

Modern Platforms Amplify the Need for Modeling

Cloud data platforms like Snowflake and Databricks offer immense computational power, but they do not eliminate the need for sound data modeling. In fact, their capabilities make thoughtful design even more critical. These platforms act as performance multipliers; a well-structured model allows them to execute queries at speeds previously unimaginable. Our guide on creating a data warehouse details the foundational steps where modeling plays this pivotal role.

The data warehousing market’s growth underscores this reality. Valued at $33.76 billion in 2025, it is projected to reach $37.73 billion in 2026 and an estimated $69.64 billion by 2029. This expansion is driven by the necessity of advanced modeling to manage massive data volumes and power the next generation of AI applications. You can find more insights about the future of data warehousing on baytechconsulting.com.

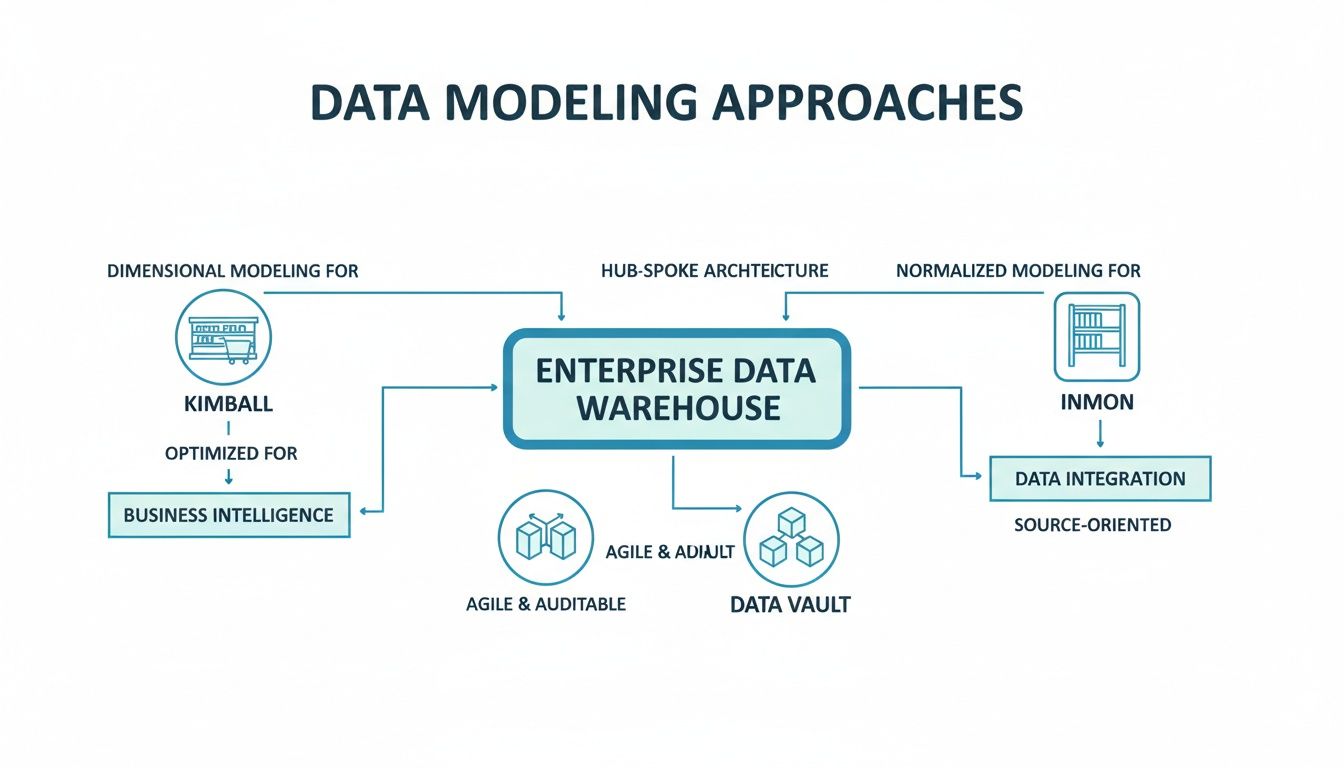

Comparing The Three Core Data Modeling Methodologies

Choosing a data modeling methodology is a foundational architectural decision. The three dominant approaches—Dimensional Modeling, Normalized Form, and Data Vault—offer different trade-offs between usability, integrity, and agility. Each provides a distinct philosophy for structuring data for analysis.

Let’s dissect each approach.

Dimensional Modeling (Kimball)

Developed by Ralph Kimball, Dimensional Modeling is optimized for end-user accessibility and query performance. Its primary goal is to make data intuitive for business analysts to consume.

The model is organized into two primary components:

- Fact Tables: These contain the quantitative measurements of a business process, such as sales amounts, order quantities, or transaction logs. They are typically narrow and very long, often containing billions of rows.

- Dimension Tables: These provide the descriptive context for the facts, answering the “who, what, where, when, and why.” Dimensions include entities like customers, products, locations, and dates.

The most common implementation is the star schema, where a central fact table connects directly to its corresponding dimension tables. This denormalized structure minimizes the number of joins required for a query, resulting in fast performance for BI tools and dashboards. It is fundamentally business-driven, built around specific processes like order management or inventory control.

Normalized Form (Inmon)

Championed by Bill Inmon, the Normalized Form (specifically 3rd Normal Form or 3NF) treats the data warehouse as the centralized, integrated source of truth for the enterprise. The primary objective is to eliminate data redundancy and ensure maximum data integrity.

This approach resembles a traditional transactional database structure, where data is broken down into many distinct tables, each representing a single entity.

- Core Principle: Minimize data duplication by storing each piece of information in one and only one place.

- Strengths: Unmatched data integrity and consistency across the enterprise. Updates are clean, and data anomalies are less likely.

- Drawback: The highly normalized structure makes direct querying complex and slow. Answering a business question often requires joining numerous tables. Consequently, analysts typically query purpose-built data marts (often dimensional models) that are sourced from this central repository.

Data Vault (Linstedt)

Developed by Dan Linstedt, Data Vault is a hybrid methodology designed for agility, scalability, and auditability in modern data environments. It excels at integrating data from numerous, disparate source systems without requiring extensive re-engineering.

The model is composed of three core components:

- Hubs: These store the unique business keys (e.g.,

CustomerID,ProductSKU), representing core business entities. Hubs contain minimal descriptive data and are stable over time. - Links: These establish the relationships or transactions between Hubs. A Link connects two or more Hubs, capturing an event like a purchase that involves a customer and a product.

- Satellites: These contain all the descriptive attributes of the Hubs and Links. Crucially, Satellites are time-stamped, providing a complete, auditable historical record of every change to an attribute.

This structure allows for parallel data loading and makes it straightforward to add new data sources without disrupting the existing model. It also provides a built-in audit trail, a critical feature for regulated industries. For a deeper dive into these approaches, you can explore various data modeling techniques in our comprehensive guide.

Comparing Key Trade-Offs

The optimal model depends on your specific organizational priorities—balancing speed, integrity, and flexibility.

Data Modeling Methodology Comparison

| Attribute | Dimensional Modeling (Kimball) | Normalized Form (Inmon) | Data Vault |

|---|---|---|---|

| Primary Goal | Usability and query performance for business analytics. | Enterprise-wide data consistency and integrity. | Agility, auditability, and scalability for raw data. |

| Structure | Star/Snowflake schemas with denormalized dimensions. | Highly normalized (3NF) relational tables. | Hubs, Links, and Satellites to separate keys and attributes. |

| Ideal Use Case | Departmental data marts, BI dashboards, reporting. | Central enterprise data warehouse (EDW), single source of truth. | Complex enterprises with many source systems, agile environments. |

| Query Speed | Very Fast. Optimized for end-user analysis. | Slow. Requires many joins for complex queries. | Moderate. Not optimized for direct end-user querying; serves as a source for data marts. |

| Flexibility | Moderate. Adding new dimensions can be complex. | Low. Changes to the core model are difficult and costly. | Very High. Easily accommodates new data sources. |

| Implementation | Fast. Models are built incrementally, process by process. | Slow. Requires significant upfront design and planning. | Moderate. Can be loaded in parallel, enabling faster ingestion. |

There is no single “best” answer. A star schema is a workhorse for BI, offering excellent query performance. Data Vault is superior for complex enterprises that require an auditable raw data layer, even though its storage footprint can be up to 2x that of other models due to its historical tracking.

The practical impact is significant. A telecom provider, for instance, can leverage five years of historical data in a well-modeled warehouse to build churn prediction models with 85% accuracy, enabling proactive customer retention campaigns. You can explore a deeper analysis of AI’s impact on cloud data warehouses on firebolt.io.

A Practical, Step-by-Step Data Modeling Workflow

Building an effective data warehouse model is a structured, repeatable process that translates business requirements into a high-performance data asset. This workflow is broken down into four logical stages.

Stage 1: Business Requirements Discovery

This is the most critical stage. A model built without deep business input is guaranteed to fail. The objective is to move beyond vague requests like “we need a sales dashboard” to understand the specific questions stakeholders need to answer.

This involves direct engagement with business users—sales leaders, marketing managers, operations analysts—to understand their objectives and analytical needs. Key questions include:

- What specific metrics define success for your department?

- How do you define key entities like “new customer” or “active product”?

- What are the key performance indicators (KPIs) you are accountable for?

- What comparisons do you regularly make (e.g., this quarter vs. last quarter, this region vs. another)?

The answers form the foundation of the model, defining the necessary facts, dimensions, and calculations.

Stage 2: Conceptual and Logical Design

With requirements defined, the next step is to translate them into a conceptual and then a logical data model. This begins by identifying the core business processes (e.g., order fulfillment, lead generation) and the key entities involved (customers, products, employees).

The most critical decision in this stage is defining the grain of your fact tables. The grain is a precise statement declaring what a single row in a fact table represents (e.g., one line item on a sales order, a daily summary of sales per store).

Establishing a clear, unambiguous grain is the cornerstone of a dimensional model. Ambiguity in the grain leads to inconsistent metrics, incorrect query results, and an immediate loss of user trust in the data.

Once the grain is set, you can build out the surrounding dimensions and their attributes. This logical design phase should be platform-agnostic; you are defining the structure and relationships, not writing SQL code. This is also the point where the overarching modeling methodology (Kimball, Inmon, or Data Vault) is chosen based on the project’s strategic goals.

Stage 3: Physical Implementation

In this stage, the logical design is implemented on the chosen cloud platform. This involves writing CREATE TABLE statements and applying platform-specific optimizations that are critical for performance.

The physical implementation will differ significantly based on the platform:

- On Snowflake: Defining clustering keys on large fact tables is essential to co-locate related data and accelerate range-based queries.

- On Databricks: Z-Ordering on multiple columns is used to achieve a similar data co-location effect within the Delta Lake storage format.

- On Google BigQuery: Partitioning and clustering tables are the primary mechanisms for controlling costs and minimizing data scanned per query.

This is also when foreign key constraints are defined (even if unenforced, they serve as documentation), data types are precisely specified, and descriptive comments are added to tables and columns. These details are essential for creating a usable and maintainable asset.

Stage 4: Iteration and Governance

A data model is not a static project; it is a living product that must evolve with the business. This stage involves a continuous cycle of iteration and governance to manage changes as new data sources emerge and business requirements shift.

A robust governance framework is necessary to prevent this evolution from becoming chaotic. Key components include:

- Version Control: Using Git to manage all SQL DDL scripts.

- Data Dictionary: Maintaining clear, accessible, and up-to-date documentation for every table and column.

- Impact Analysis: Establishing a formal process for assessing how a proposed change will affect downstream reports and dashboards before implementation.

Treating the model as an evolving product ensures the data warehouse remains aligned with business strategy.

Modeling for Modern Cloud Data Platforms

The architecture of cloud platforms like Snowflake, Databricks, and BigQuery has fundamentally changed the rules of data modeling. The separation of storage and compute has upended traditional cost-performance assumptions, requiring a new approach to model design.

In legacy on-premises systems, storage and compute were tightly coupled. Storing large, denormalized datasets was expensive, and complex queries could monopolize system resources. Today, cloud storage is inexpensive, and scalable compute clusters can be provisioned on-demand. This architectural shift redefines the entire data warehouse data modeling playbook.

The New Rules of Denormalization

For decades, normalization was the default best practice, aimed at minimizing data redundancy to save storage costs. Denormalization was used sparingly due to its storage penalty.

In the cloud, this logic is inverted. The cost of performing a complex join across multiple tables often exceeds the cost of storing redundant data. Since compute is frequently billed by the second, query simplification directly reduces operational costs. This makes a strong case for building wider, denormalized tables. Creating a single, wide table containing all customer, order, and product details—once considered an anti-pattern—is now often a sound strategy for powering BI dashboards because it minimizes joins, accelerates queries, and lowers compute costs.

In a modern cloud data warehouse, optimization priorities shift from storage footprint to query time and compute cost. Denormalization is a key technique for reducing the compute resources required for analysis, making it a powerful tool for cost and performance management.

Modeling for the Lakehouse Architecture

The Lakehouse paradigm, prominent in platforms like Databricks, combines the scalability of a data lake with the structure of a data warehouse. This is typically implemented using a multi-layered “medallion” architecture (Bronze, Silver, Gold), where data is progressively refined.

Modeling strategies must align with this layered approach:

- Bronze Layer (Raw): This is the landing zone for data in its original format, often as semi-structured JSON or Parquet files. No modeling occurs here; the goal is to capture a complete, untransformed historical record.

- Silver Layer (Cleansed): Data is parsed, validated, and structured. Nested JSON fields may be extracted into columns, and basic data quality rules are applied. This layer often resembles a normalized model.

- Gold Layer (Aggregated): This is the presentation layer for business users. Data is heavily denormalized and aggregated into clean star schemas or wide, flat tables optimized for specific analytical use cases, such as sales reporting or marketing analytics.

This layered model provides flexibility, allowing data scientists to work with semi-raw data in the Silver layer while business analysts benefit from high-performance, query-friendly models in the Gold layer.

Handling Semi-Structured Data Natively

Cloud platforms excel at handling semi-structured data like JSON without requiring a rigid, upfront schema definition. Instead of shredding a JSON object into multiple relational tables, platforms like Snowflake allow you to ingest the entire document into a single VARIANT column and query it directly using dot notation.

This simplifies ingestion pipelines and makes the model resilient to changes in the source schema. A common best practice is to land raw JSON in a VARIANT column and then create materialized views that flatten frequently used fields into a structured table for end-users. This approach combines the flexibility of schema-on-read with the performance of a traditional structured model.

Advanced Topics and Common Pitfalls to Avoid

Moving from theory to practice introduces real-world complexities that can derail a project. Two areas consistently cause issues: managing historical data changes and falling into avoidable process-related traps. Mastering these topics separates a functional data warehouse from a strategic one.

Managing Historical Data with SCDs

Handling Slowly Changing Dimensions (SCDs) is a critical challenge. Attributes like a customer’s address, a product’s category, or a sales territory change over time. How you track these changes directly impacts the accuracy of historical reporting. Simply overwriting old values erases history.

The appropriate SCD technique depends on the analytical requirements of the business:

- Type 1 (Overwrite): The old value is replaced with the new one. This is suitable for correcting errors but provides no historical tracking.

- Type 2 (Add New Row): This is the most common method for historical analysis. When an attribute changes, a new row is created for that dimension, and effective date columns or a current flag are used to identify the active version.

- Type 3 (Add New Column): A “previous value” column is added to the dimension table. This is useful for direct before-and-after comparisons but is not scalable for attributes that change frequently.

The choice is critical. Using a Type 1 overwrite for a salesperson’s territory change makes it impossible to accurately report on their performance in their old territory. A Type 2 approach preserves this history, which is essential for any meaningful trend analysis.

Common and Costly Modeling Mistakes

Process failures can sink a project even with a technically sound model. The most dangerous pitfalls are often related to people and planning, not code.

Poor data quality is a recurring problem. Issues like duplicate records or missing values can lead to over 30% inaccuracy in analyses, eroding user trust. This is exacerbated by poor historical tracking, where overwrites destroy the ability to analyze trends. You can learn more about the challenges in the data warehousing market from gminsights.com.

Modeling in Isolation from Business Users: This is the cardinal sin of data warehousing. Building a model without continuous feedback from the people who will use it results in a technically sound but functionally useless asset.

Other common traps include:

- Inconsistent Naming Conventions: Using

Cust_IDin one table andCustomerKeyin another creates confusion and slows down development. A clear, documented naming standard is non-negotiable. - Premature Optimization: Do not spend weeks debating the optimal clustering key for a table before the logical model is finalized. Define the business relationships first, then apply physical optimizations based on actual query patterns.

- Ignoring Data Governance: Without clear ownership and processes for defining metrics (e.g., “active customer”) and managing schema changes, even the best-designed model will decay into chaos over time.

By anticipating these challenges, you can steer your data warehouse data modeling project toward a successful outcome that delivers sustained business value.

How to Select the Right Data Modeling Partner

Choosing an implementation partner is as critical as selecting the right methodology. A strong partner delivers a scalable, future-proof asset; a poor one leaves you with a brittle, expensive, and unmaintainable system. This decision requires a rigorous evaluation process.

The goal is to find a team that thinks like an architect, understanding the trade-offs between different data warehouse data modeling approaches and connecting them to your long-term business objectives.

Vetting Technical and Platform Expertise

A partner’s technical competence is non-negotiable. They must have proven, hands-on experience with both modeling theory and the specific cloud platforms you use. Vague claims of expertise are a red flag.

Ask direct questions to assess their depth of knowledge:

- Methodology Fluency: Can they clearly articulate why they would choose Kimball over Inmon or Data Vault for a given business problem? Ask for client examples illustrating these decisions.

- Platform-Specific Knowledge: How do they optimize models for Snowflake’s clustering keys versus Databricks’ Z-Ordering? What are their best practices for handling semi-structured data in BigQuery?

- Future-Proofing for AI/ML: How do their models support feature engineering for machine learning? Ask them to demonstrate how a model they built integrates with an MLOps pipeline.

A great partner won’t just build what you ask for. They will challenge your assumptions and bring forward-thinking design patterns that anticipate future needs, ensuring the model you build today doesn’t become a bottleneck tomorrow.

Assessing Governance and Delivery Process

Technical skill is insufficient without a disciplined process. A partner’s approach to governance, data quality, and project management reveals their ability to deliver a sustainable solution. Look for a mature, repeatable methodology.

Dig into their operational discipline. A guide to data engineering consulting services can provide a framework for comparing vendors.

Ensure their process includes:

- Data Governance Integration: How are data quality checks and metadata management built into their development workflow, not added as an afterthought?

- Collaborative Workflow: What is their process for gathering requirements and validating designs with your business teams?

- Documentation and Handover: Do they deliver a comprehensive data dictionary, lineage maps, and operational runbooks at project completion? You must be able to operate the system independently.

You are hiring a partner to de-risk a major investment. Their ability to demonstrate a rigorous, transparent, and forward-looking process is the best indicator of a successful outcome.

Answering Your Data Modeling Questions

Even with a solid plan, common questions arise during a data warehouse data modeling project. Addressing them directly ensures team alignment and project momentum.

When to Use a Star vs. Snowflake Schema?

This choice involves a trade-off between query performance and storage/maintenance efficiency.

A star schema is optimized for query speed. Its dimension tables are denormalized (wide, with some data repetition), which minimizes the number of joins needed to answer a query. This makes it simple for BI tools to consume and is almost always the preferred choice for end-user-facing analytics.

A snowflake schema normalizes the dimension tables, breaking them into smaller, related tables. This reduces data redundancy and simplifies maintenance, but it increases query complexity and can slow performance due to the additional joins required.

The decision comes down to priorities: prioritize raw query speed (star) or streamlined maintenance and storage efficiency (snowflake).

With Data Lakes, Is Data Modeling Even Relevant Anymore?

Yes, it is more critical than ever. A data lake excels at storing vast quantities of raw, unstructured data at a low cost. However, it is not suitable for direct business analysis. Without structure, a data lake becomes a “data swamp”—a repository where data is inaccessible and insights cannot be extracted.

Data modeling is the process that transforms the raw data in the lake into a clean, governed, and high-performance asset for the business. This often involves building a well-structured presentation layer (e.g., the “Gold” layer in a medallion architecture) on top of the raw data, typically using a star schema. This structured layer is what powers dashboards, reports, and reliable AI/ML models.

The most valuable skill a data modeler can have today isn’t just knowing the difference between a hub and a link. It’s the ability to be a translator—to listen to messy, sometimes vague business problems and convert them into a logical data structure that delivers clear, trustworthy answers.

Without that translation, a model can be technically correct but practically useless.

Ready to find a partner who can translate your business needs into a high-performance data model? At DataEngineeringCompanies.com, we provide expert rankings and practical tools to help you select the right data engineering consultancy with confidence. Find your ideal data partner today!

Top Data Engineering Partners

Vetted experts who can help you implement what you just read.

Related Analysis

A Practical Guide to Modern Data Warehouse Modeling

Master modern data warehouse modeling with this practical guide. Explore Kimball, Inmon, and Data Vault to design scalable models for today's cloud platforms.

A Practical Guide to Data Modeling Techniques for Modern Data Platforms

Explore data modeling techniques and practical guidance across relational, dimensional, and Data Vault models for Snowflake, Databricks, and more.

A Practical Guide to Building a Data Warehouse in 2025

A practical guide to building data warehouse architecture, data modeling, ETL/ELT, governance, and vendor choices for modern data teams.