A Practical Guide to Building a Data Warehouse in 2025

A data warehouse operationalizes raw data from disparate business systems into a unified, reliable source for analytics. It’s the core infrastructure powering business intelligence, real-time reporting, and AI/ML initiatives. For any organization serious about data-driven decision-making, building a robust data warehouse is not optional; it’s a foundational requirement.

Why Building a Data Warehouse Is a Strategic Imperative

A modern data warehouse is not a passive data repository. It is an active, operational hub that provides the clean, structured data necessary for high-value analytical workloads, including predictive modeling and machine learning applications.

The shift to cloud platforms like Snowflake, Databricks, and Google BigQuery has fundamentally altered the economics of data warehousing. What was once a capital-intensive infrastructure project is now an operational expenditure. This consumption-based model provides access to enterprise-grade analytics capabilities without prohibitive upfront investment.

The Business Case for a Modern Data Warehouse

The strategic value is reflected in market trends. The global data warehousing market, valued at approximately USD 6.988 billion in 2024, is projected to reach USD 32.89 billion by 2035. This growth is driven by the escalating demand for analytics platforms that can deliver a competitive advantage. You can analyze the drivers behind the data warehousing market’s rapid expansion for a deeper understanding.

This guide provides a direct, technical roadmap for building a data platform that delivers measurable business value, covering architecture, implementation, and operational management.

The function of a data warehouse is to consolidate disparate data sources for contextual analysis. Raw, isolated datasets have limited utility. Value is unlocked when data is connected to business processes and used to answer specific, critical questions.

Choosing Your Foundational Data Architecture

Before any implementation, a clear architectural blueprint is required. This decision dictates performance, cost, and agility for the system’s entire lifecycle. It is a strategic choice that must be directly aligned with business objectives.

The initial step is to translate business goals into specific data requirements. For example, the objective “improve customer personalization” is not actionable for an engineering team. Stakeholder interviews must probe for specifics:

- What specific customer interactions require personalization (e.g., website recommendations, email campaigns, in-app notifications)?

- What data entities define a “customer” (e.g., CRM records, web behavior, transaction history, support tickets)?

- Where does this source data currently reside (e.g., Salesforce, Google Analytics, production databases, third-party systems)?

This process transforms abstract goals into a concrete map of necessary data sources and desired analytical outputs. Only then can you effectively evaluate the dominant architectural patterns of 2025.

Cloud Data Warehouse vs. Lakehouse vs. Hybrid

Three primary architectural models exist, each with distinct trade-offs in complexity, data handling, and cost.

A Cloud Data Warehouse, exemplified by platforms like Snowflake or Google BigQuery, excels at processing structured and semi-structured data. It is engineered for high-performance SQL queries, making it the standard for business intelligence reporting, executive dashboards, and complex analytical queries.

The Data Lakehouse, championed by platforms such as Databricks, merges the low-cost, flexible storage of a data lake with the data management features of a warehouse. It is designed to handle all data types—structured, semi-structured, and unstructured (images, text, logs). This makes it suitable for machine learning and advanced data science workloads that coexist with standard BI.

The Hybrid Model is often the most pragmatic solution. A common implementation uses a cloud data warehouse as the primary engine for BI, fed with curated data from a data lake. Concurrently, data science teams can work with raw, unstructured data directly in the lake environment.

A common error is selecting an architecture based on market hype rather than documented requirements. A lakehouse may seem forward-thinking, but if 95% of use cases involve standard BI on structured data, a traditional cloud data warehouse will likely be more cost-effective and simpler to manage.

This table provides a comparative analysis to align your requirements with the appropriate model.

Comparing Data Warehouse, Lakehouse, and Hybrid Models

| Attribute | Cloud Data Warehouse (e.g., Snowflake) | Data Lakehouse (e.g., Databricks) | Hybrid Model |

|---|---|---|---|

| Primary Use Case | Business Intelligence, SQL analytics, enterprise reporting. | AI/ML, data science, streaming analytics, and BI. | Supports both BI and advanced analytics by integrating a data lake and a data warehouse. |

| Data Types | Optimized for structured and semi-structured data (JSON, Avro). | Handles structured, semi-structured, and unstructured data. | Manages all data types by storing raw data in the lake and structured data in the warehouse. |

| Performance | High query performance for structured data analytics. | Good performance for BI, but optimized for large-scale data processing and ML. | Performance is optimized for each platform’s strength—fast SQL in the warehouse, scalable processing in the lake. |

| Cost Structure | Generally higher storage costs but optimized compute. Pay-per-query models. | Lower storage costs (uses object storage like S3), with compute based on cluster usage. | A blended cost model that requires careful management across both platforms to avoid budget overruns. |

| Flexibility | Less flexible with unstructured data; schemas are more rigid. | Highly flexible; supports diverse workloads from SQL to Python/R on the same data. | Offers high flexibility but introduces complexity in data movement and governance between environments. |

The optimal architecture achieves business objectives without incurring unnecessary complexity or cost. For a deeper technical comparison of the leading platforms, analyze the differences between Snowflake and Databricks to determine the best fit for your team’s skillset and long-term roadmap.

Designing Your Data Ingestion and Transformation Pipelines

With a defined architecture, the next critical phase is designing data pipelines to ingest and process data. The success of the warehouse depends on efficient, reliable pathways to move data from source systems to an analysis-ready state.

The primary architectural decision here is the sequence of Extraction, Transformation, and Loading.

The Big Shift from ETL to ELT

Historically, ETL (Extract, Transform, Load) was the standard. Data was extracted from a source, transformed in a separate processing engine, and the resulting structured data was loaded into the warehouse. This was necessary due to the limited and expensive compute resources of on-premise data warehouses.

Modern cloud platforms have enabled a paradigm shift to ELT (Extract, Load, Transform). Raw data is extracted and loaded directly into the cloud data warehouse. Transformations are then executed within the warehouse, leveraging its scalable compute engine.

The advantages of the ELT approach are significant:

- Speed and Flexibility: Ingesting raw data is fast. Data can be transformed and remodeled later for various analytical needs without requiring re-ingestion from the source.

- Full Data Fidelity: An unaltered copy of the source data is preserved. This is invaluable for debugging, re-running transformations, or exploring new use cases.

- Scalability: Cloud data warehouses are designed for large-scale SQL transformations on massive datasets.

In practice, ELT has become the default architecture for modern data warehouse implementations. It decouples ingestion from modeling, allowing data engineers to focus on reliable data movement while analytics engineers focus on shaping data for business consumption.

Modern Data Ingestion Methods

With an ELT pattern established, the next consideration is the ingestion mechanism. While batch processing remains relevant, demand for fresher data has prioritized more dynamic methods.

Change Data Capture (CDC) is highly effective for relational databases (e.g., PostgreSQL, MySQL). Instead of full table extractions, CDC tools read the database’s transaction log to capture inserts, updates, and deletes in near-real-time. This provides a continuous stream of changes with minimal performance impact on the source system.

For SaaS applications like Salesforce or HubSpot, API connectors are the standard. Tools such as Fivetran, Airbyte, or Stitch offer pre-built connectors that manage API complexities like authentication, pagination, and rate limiting. This significantly reduces the engineering effort required to build and maintain custom integration scripts. To see these components in action, review these data pipeline architecture examples.

Choosing the Right Data Modeling Technique

Once raw data is loaded, it must be transformed into a structured, analytics-ready format through data modeling. Two methodologies dominate this space.

The Kimball method (dimensional modeling) is the most common approach for BI use cases. It organizes data into “fact” and “dimension” tables.

- Fact tables contain quantitative metrics from business events (e.g.,

sales_amount,page_views). - Dimension tables provide the descriptive context for those events—the “who, what, where, when, why” (e.g.,

dim_customer,dim_product,dim_date).

This “star schema” structure is intuitive for business users and optimized for the high-performance queries that power BI tools.

Alternatively, Data Vault is a methodology designed for large-scale, enterprise data integration and auditability. It structures data into Hubs (business keys), Links (relationships), and Satellites (descriptive attributes). Data Vault excels at integrating data from numerous source systems and provides a complete, auditable history. However, its structure is more complex and typically requires an additional transformation layer to create user-friendly dimensional models for BI consumption.

For most organizations, the Kimball method is the pragmatic starting point, as it directly addresses the primary goal of making data accessible and understandable for business analysis.

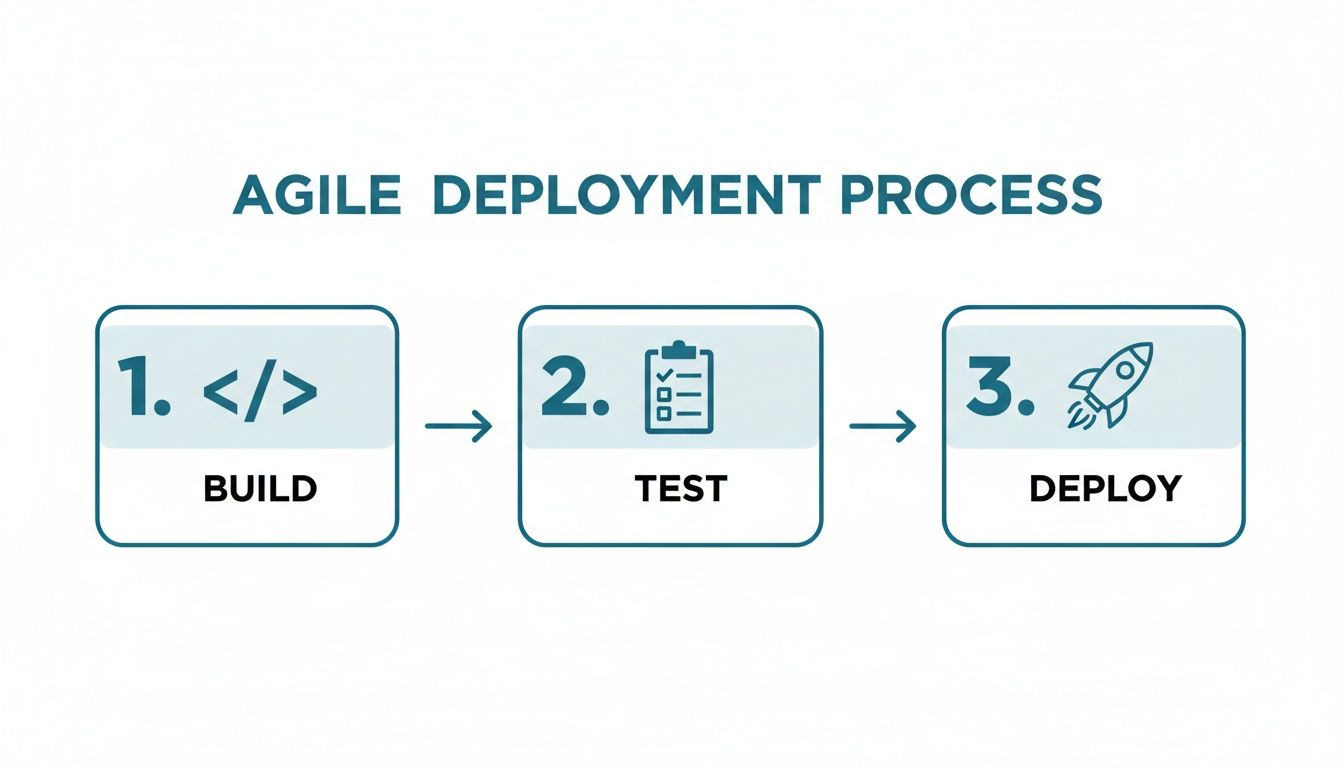

Agile Build, Test, and Deploy: From Code to Production

Monolithic, “big bang” data warehouse deployments are obsolete and carry unacceptably high risk. The modern standard is an agile, iterative methodology. This approach breaks the project into small, manageable units of work, delivering value in short sprints while maintaining continuous feedback between engineers and business stakeholders.

The prerequisite for agile development is the establishment of isolated environments: development, testing/staging, and production. This is a non-negotiable control that prevents development activities from impacting production systems. Each environment requires its own credentials, compute resources, and data access policies, creating a structured promotion path for code.

Adopting an iterative build process is the single most effective way to de-risk a data warehouse project. It replaces a single, high-stakes failure point with numerous small, low-risk deployments. This enables early issue detection, builds stakeholder confidence, and ensures the final product solves real business problems.

A Layered Testing Strategy

A robust testing strategy is central to the build process and should encompass several layers.

Unit Testing: The First Line of Defense Unit tests validate the smallest components of transformation logic in isolation. For a SQL model calculating customer lifetime value (LTV), a unit test would supply a small, controlled dataset and assert that the output matches a pre-calculated, expected result. This practice isolates and identifies logic errors at the source.

Integration Testing: Verifying End-to-End Processes Integration tests confirm that entire data pipelines function correctly from source to destination. An integration test might trigger a data extraction from a source system like Salesforce, execute the full sequence of ELT jobs, and then query the final analytics tables to verify data integrity. These tests identify issues related to dependencies, permissions, and schema changes between systems.

User Acceptance Testing (UAT): The Final Sign-Off UAT is the final validation step. A new dashboard or dataset is provided to the business team for review and approval. Formalizing this as a mandatory sign-off process ensures that the delivered product meets business requirements and builds trust in the data.

Automating Deployments with CI/CD

Manual deployments are slow, error-prone, and incompatible with agile development. A CI/CD (Continuous Integration/Continuous Deployment) pipeline automates the entire testing and deployment workflow.

A typical CI/CD process is triggered when a developer commits code:

- The pipeline automatically executes all unit tests.

- If unit tests pass, the code is deployed to a staging environment where integration tests are run.

- Only after all tests pass is the code merged into the main branch, ready for production deployment.

This automation accelerates delivery and improves reliability. Advanced teams may implement strategies like blue-green deployments. New code is deployed to a parallel “green” production environment. After verification, live traffic is redirected to it. The previous “blue” environment serves as an immediate rollback target, enabling zero-downtime releases.

Managing Performance, Governance, and Cost

Deploying a data warehouse is the start, not the end. Ongoing operational management is critical for ensuring performance, security, and cost-effectiveness. A modern data platform is a dynamic system requiring continuous attention.

The shift to Data Warehouse as a Service (DWaaS) has significantly reduced costs compared to on-premise solutions, often by 50-70%. This has fueled market growth, with the global DWaaS market projected to expand from USD 9.79 billion in 2025 to USD 39.58 billion by 2032. North America currently holds a 40.20% market share. You can find more detail in this DWaaS market report on fortunebusinessinsights.com.

However, the pay-as-you-go model that drives these savings can lead to uncontrolled spending without diligent oversight. Managing a data warehouse requires the same iterative approach used in its construction.

This operational cycle of building, testing, and deploying improvements is continuous, even after the initial launch.

Performance Tuning and Optimization

Slow query performance directly translates to higher costs in a cloud environment by consuming excess compute credits. Proactive performance tuning is essential.

Begin by using the platform’s monitoring tools to identify the most resource-intensive queries. Once identified, apply targeted optimizations:

- Query Refactoring: Inefficient SQL is a common cause of poor performance. Analyze queries for suboptimal joins, full-table scans, or unnecessarily complex logic that can be simplified.

- Materialized Views: For dashboards that repeatedly run complex aggregations, use materialized views. These pre-calculate and store results, enabling near-instantaneous load times and reducing redundant compute.

- Cluster Right-Sizing: Avoid over-provisioning compute resources. Modern platforms allow for dynamic scaling. Automate the process of scaling clusters up for intensive workloads (e.g., nightly batch loads) and scaling down or pausing them during idle periods to eliminate spending on unused resources.

Implementing Robust Data Governance and Security

As the central source for analytics, the data warehouse must be secured. Effective data governance is not merely a compliance exercise; it is fundamental to building and maintaining user trust in the data.

A practical governance framework is built on key principles. The foundation is Role-Based Access Control (RBAC), which ensures that users can only access data relevant to their roles. For instance, a marketing analyst should be restricted from accessing sensitive HR salary data.

For enhanced security, implement data masking and tokenization. These techniques obscure or substitute sensitive data like PII (Personally Identifiable Information), particularly in non-production environments. This allows developers to work with realistic data structures without exposing confidential information.

The objective of governance is not to restrict data access, but to enable safe and appropriate access. A data catalog is a critical tool, providing a searchable inventory of data assets that helps users discover trusted, curated datasets.

A well-defined strategy is essential for success. For a detailed framework, consult our guide on data governance best practices.

Taking Control of Your Cloud Spend

Managing costs in a consumption-based model requires a shift from capital expenditure planning to active operational expense management.

First, establish visibility. Use your cloud provider’s cost management tools to analyze spending by team, project, or workload. This identifies where budget is being allocated and why.

Next, implement budget alerts. These automated notifications trigger when spending approaches a predefined threshold, preventing significant budget overruns.

Finally, consistently tie spending to business value. If a specific workload consumes significant resources, you must be able to demonstrate the measurable return it generates. This continuous analysis ensures the data warehouse operates as a value driver, not just a cost center.

Finding the Right Implementation Partner

A sound strategy is meaningless without effective execution. Building a modern data platform requires a specialized and often scarce skillset. Engaging a data engineering consultancy can provide the necessary expertise and experience from dozens of similar projects.

This decision is critical. You are not just procuring services; you are establishing a partnership that will shape your organization’s data capabilities. The right partner accelerates delivery, helps avoid costly mistakes, and ensures the final solution drives business value.

How to Evaluate Potential Partners

Look beyond marketing materials and focus on verifying practical experience. Do not just ask if they are proficient with Snowflake or Databricks. Request detailed case studies of projects with similar scope, scale, and industry context.

Industry-specific expertise is crucial. For example, retail and e-commerce constituted 21% of the data warehousing market in 2023, followed by banking and finance (BFSI) at 19%. These sectors have unique data challenges and stringent compliance requirements. A partner with relevant domain knowledge will not be learning on your time and budget. You can explore these sector-specific market trends on marketgrowthreports.com for more context.

A partner’s competence is revealed by their responses to detailed technical and process-oriented questions. Vague answers regarding project methodology, governance, or security protocols are significant red flags indicating a lack of experience.

Key Questions to Include in Your RFP

A structured Request for Proposal (RFP) facilitates an objective, apples-to-apples comparison of potential partners.

Essential questions to include are:

- Project Management: What is your team structure and communication cadence? Describe your agile development methodology in detail.

- Technical Depth: Detail your hands-on experience with our specific technology stack. What is your process for code reviews and automated testing?

- Governance and Security: What is your standard methodology for implementing role-based access control? Describe a past project where you handled sensitive data masking.

- Pricing and Contracts: Provide a detailed breakdown of your pricing model. What is included, and what are potential overage costs?

Be cautious of firms that propose a one-size-fits-all solution or offer pricing that seems unrealistically low. Look for transparency and a consultative approach. A strong partner acts as a strategic advisor, focused on building a lasting data capability for your business.

Answering the Tough Questions About Building a Data Warehouse

Several key practical questions consistently arise during the planning phase of a data warehouse project.

How Long Is This Really Going to Take?

The timeline is scope-dependent. A project for a single department with a few well-structured data sources might reach an initial go-live in 3-6 months. An enterprise-wide platform integrating numerous legacy systems is more realistically a 12-18 month endeavor.

The most common failure pattern is attempting a “big bang” release. The correct approach is iterative. Deliver a minimum viable product (MVP) that solves a single, high-impact business problem first. This demonstrates value quickly and builds momentum for subsequent phases.

The objective is not to deliver a complete system at once. It is to deliver a functional, valuable component to the business as quickly as possible to prove ROI and justify further investment.

What’s a Realistic Budget for a Data Warehouse Project?

The budget consists of three main components: technology licensing, implementation services, and internal personnel.

- Platform & Licensing Costs: Cloud platforms operate on a consumption model. A small team might spend a few thousand dollars per month, while large enterprises can easily exceed $50,000 monthly.

- Implementation Partner: Engaging a specialized firm for the initial build can range from $100,000 to over $500,000, depending on project scope and duration.

- Your Internal Team: Do not overlook the ongoing cost of your own data engineers, analysts, and project managers required to operate and derive value from the platform.

Can We Just Build This In-House?

Building in-house is feasible if your team possesses the requisite senior-level expertise in data architecture, pipeline development, cloud infrastructure, and data security.

If your team lacks prior experience with your chosen platform, such as Snowflake or Databricks, the learning curve is steep and can lead to architectural mistakes that are costly to remediate.

A hybrid approach is often most effective. Use an experienced partner to establish a solid architectural foundation and mentor your internal team. The partner builds the core infrastructure, enabling your team to take ownership and drive future development.

Selecting the right partner is a high-stakes decision that dictates the success of your data warehouse initiative. At DataEngineeringCompanies.com, we provide independent, data-driven rankings and resources to help you find a qualified consultancy. Explore our expert reviews and guides to make an informed choice.

Top Data Engineering Partners

Vetted experts who can help you implement what you just read.

Related Analysis

A Practical Guide to Data Warehouse Data Modeling

Learn modern data warehouse data modeling. This guide compares Dimensional, Inmon, and Data Vault methods for platforms like Snowflake and Databricks.

A Practical Guide to Creating a Data Warehouse That Delivers Business Value

A practical blueprint for creating data warehouse architecture, pipelines, modeling, and governance to drive insight.

dbt Implementation Partners: Who Can Tame Your DAG in 2026?

Choosing the right dbt Labs partner for your analytics engineering transformation. We compare Premier vs. Specialized boutique partners.