A Practical Guide to Cloud Data Integration for Modern Data Stacks

Cloud data integration is the systematic process of consolidating data from disparate sources into a central cloud-based repository. It’s the engineering discipline that creates automated, reliable data pipelines from operational systems like Salesforce into a target like a cloud data warehouse. Executed correctly, it creates a single source of truth—the non-negotiable foundation for analytics, business intelligence, and AI.

The Strategic Imperative for Centralized Cloud Data

Data originates in isolated systems: a CRM holds customer data, an ERP has financial records, and legacy databases contain operational metrics. Without integration, these systems remain information silos, making a holistic business view impossible. Cloud data integration replaces manual, error-prone data extraction with an automated, scalable infrastructure.

This process ensures that clean, consistent, and timely data is delivered to a central destination, typically a cloud data warehouse like Snowflake, Databricks, or Google BigQuery. The result is a unified data asset that serves as the analytical backbone of the organization.

Why Data Integration Is a Core Business Function

What was once a back-office IT task is now a strategic business imperative. Without a coherent data integration strategy, key initiatives, from predictive analytics to AI-driven personalization, fail. This isn’t a temporary trend; it reflects a fundamental shift in how businesses operate.

The global data integration market is projected to grow from USD 17.58 billion in 2026 to USD 33.24 billion by 2030, a surge driven by the complexities of managing data in multi-cloud and hybrid environments. For a detailed breakdown of these drivers, see recent industry analysis.

This growth underscores a core principle: competitive advantage is now directly tied to the ability to make decisions based on complete, accurate data. Effective cloud data integration is the mechanism that delivers this capability.

An organization operating on siloed data cannot form a coherent view of itself. Cloud data integration imposes order, creating the synchronized data foundation required for meaningful analysis and insight generation.

A successful implementation delivers tangible outcomes:

- Unified Decision-Making: Executive dashboards reflect a comprehensive view of the entire business, not fragmented departmental perspectives.

- AI and Machine Learning Enablement: AI models require large volumes of high-quality, consolidated data. Integration provides the continuous data supply chain to train and run these models effectively.

- Enhanced Operational Efficiency: Automated data flows eliminate manual data reconciliation, redirecting engineering resources from low-value data wrangling to high-value analysis and innovation.

Choosing Your Integration Architecture

Selecting an appropriate integration architecture is a foundational decision with long-term consequences for scalability, cost, and efficiency. The choice depends on data sources, cloud infrastructure, and the required latency for business insights. An incorrect architectural choice leads to inefficient data pipelines, technical debt, and budget overruns.

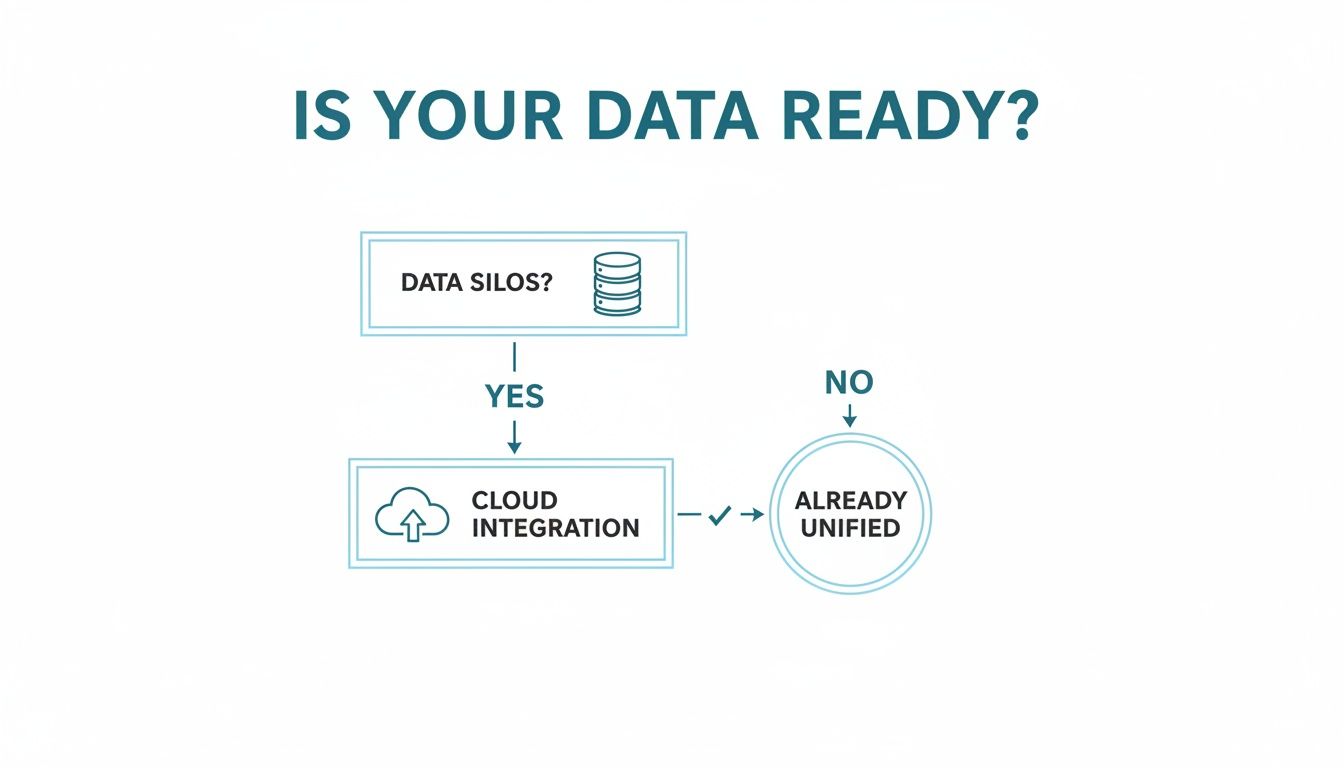

This initial decision often starts with a simple assessment: is data fragmented across systems or largely centralized?

For nearly every enterprise, data is fragmented. Cloud data integration is therefore the critical prerequisite for achieving a unified analytical view. The three primary architectural patterns to achieve this are ETL, ELT, and CDC.

ETL: The Traditional Staging-First Approach

ETL (Extract, Transform, Load) is the legacy pattern for data integration. It operates by performing transformations on a separate, dedicated staging server before loading the data into the destination.

- Extract: Raw data is pulled from source systems (CRMs, ERPs, databases).

- Transform: On a dedicated processing engine outside the data warehouse, the data is cleaned, standardized, aggregated, and structured.

- Load: The processed, analysis-ready data is loaded into the cloud data warehouse.

ETL originated in an era of limited data warehouse processing power and high storage costs. By transforming data before loading, it minimized the resource burden on the destination warehouse. This pattern remains relevant for use cases with highly structured data or strict compliance requirements that mandate data masking before it enters the central repository.
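The three ETL steps can be sketched in a few lines of Python. This is a minimal illustration, not a production pipeline: the source rows, the `customers` table, and the validation rule are hypothetical, and an in-memory SQLite database stands in for the cloud data warehouse. The point it shows is that cleaning happens before anything reaches the destination.

```python
import sqlite3

# Hypothetical source rows standing in for a CRM export.
SOURCE_ROWS = [
    {"id": 1, "email": " Ada@Example.com ", "amount": "120.50"},
    {"id": 2, "email": "grace@example.com", "amount": "80"},
    {"id": 3, "email": None, "amount": "15.25"},  # fails validation below
]


def extract():
    """Extract: pull raw records from the source system."""
    return list(SOURCE_ROWS)


def transform(rows):
    """Transform: clean and standardize outside the warehouse."""
    cleaned = []
    for row in rows:
        if not row["email"]:
            continue  # drop records without an email (assumed rule)
        cleaned.append(
            (row["id"], row["email"].strip().lower(), float(row["amount"]))
        )
    return cleaned


def load(conn, rows):
    """Load: write only the processed rows to the destination."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS customers "
        "(id INTEGER PRIMARY KEY, email TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO customers VALUES (?, ?, ?)", rows)
    conn.commit()


# SQLite is only a stand-in for the real warehouse.
etl_warehouse = sqlite3.connect(":memory:")
load(etl_warehouse, transform(extract()))
etl_result = etl_warehouse.execute(
    "SELECT id, email, amount FROM customers ORDER BY id"
).fetchall()
```

Because the invalid record is dropped in `transform`, the destination never sees it. That is the property that makes this pattern attractive when masking or filtering must happen before data enters the central repository.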

ELT: The Modern In-Warehouse Approach

ELT (Extract, Load, Transform) is the dominant modern pattern, enabled by the elastic compute and storage of cloud data warehouses. It defers the transformation step until after the data has been loaded.

- Extract: Raw data is pulled from sources.

- Load: The raw, unprocessed data is immediately loaded into the cloud data warehouse.

- Transform: All data cleaning, joining, and modeling occur inside the warehouse, leveraging its massively parallel processing capabilities.

This pattern takes full advantage of the scalable engines in platforms like Snowflake or Google BigQuery. It provides significant flexibility, allowing raw data to be stored first and then transformed for multiple, diverse business use cases as needed. ELT is the standard for most cloud data integration projects today, particularly those involving large volumes of semi-structured data from modern applications.

ELT inverts the traditional model. It shifts the paradigm from “prepare then deliver” to “deliver raw, prepare on demand,” utilizing the destination system’s power to provide greater speed and flexibility for data teams.
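For contrast, the same idea in ELT order might look like the sketch below. The table names (`raw_orders`, `customer_revenue`) and sample rows are hypothetical, and SQLite again stands in for a cloud warehouse such as those named above. Raw data lands first, and the cleaning is expressed as SQL that runs inside the destination.

```python
import sqlite3

elt_warehouse = sqlite3.connect(":memory:")  # stand-in for the warehouse

# Load: raw rows land untouched, inconsistencies included.
elt_warehouse.execute(
    "CREATE TABLE raw_orders "
    "(order_id INTEGER, customer TEXT, amount TEXT, status TEXT)"
)
raw_rows = [
    (1, " Acme ", "100.00", "PAID"),
    (2, "acme", "50.50", "paid"),
    (3, "Globex", "20.00", "cancelled"),
]
elt_warehouse.executemany("INSERT INTO raw_orders VALUES (?, ?, ?, ?)", raw_rows)

# Transform: cleaning and aggregation run as SQL inside the warehouse.
elt_warehouse.execute(
    """
    CREATE TABLE customer_revenue AS
    SELECT LOWER(TRIM(customer)) AS customer,
           SUM(CAST(amount AS REAL)) AS revenue
    FROM raw_orders
    WHERE LOWER(status) = 'paid'
    GROUP BY LOWER(TRIM(customer))
    """
)

elt_result = elt_warehouse.execute(
    "SELECT customer, revenue FROM customer_revenue ORDER BY customer"
).fetchall()
raw_count = elt_warehouse.execute("SELECT COUNT(*) FROM raw_orders").fetchone()[0]
```

The raw table is still fully available after the transformation, so a second model with different business rules could be built from the same rows later without re-extracting from the source.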

CDC: The Real-Time Replication Engine

Change Data Capture (CDC) is a technique focused on data replication efficiency. Instead of copying entire datasets on a schedule, CDC identifies and captures only the incremental changes—updates, insertions, and deletions—in source databases as they occur, typically by reading database transaction logs.

Consider a large ERP system with 10 million customer records. A full daily reload is inefficient and places a heavy load on the source system. With CDC, after the initial load, the pipeline only transmits the 10,000 records that changed that day (0.1% of the table), not the entire 10 million.

CDC is critical for use cases requiring low-latency data, such as:

- Real-time operational dashboards

- Fraud detection systems

- Maintaining synchronization between operational and analytical databases

This method dramatically reduces the load on source systems and minimizes network traffic, making it a highly efficient pattern for keeping a cloud data warehouse current.
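On the receiving side, applying captured changes can be sketched as follows. The event shape (`op`, `key`, `row`) is a hypothetical format chosen for illustration; real CDC tools define their own formats, and reading the transaction log itself is out of scope here. The sketch only shows how a replica stays in sync from a stream of inserts, updates, and deletes.

```python
def apply_changes(target, events):
    """Apply ordered change events to a replica keyed by primary key."""
    for event in events:
        op, key = event["op"], event["key"]
        if op in ("insert", "update"):
            target[key] = event["row"]  # upsert the latest row image
        elif op == "delete":
            target.pop(key, None)  # tolerate deletes for unknown keys
        else:
            raise ValueError(f"unknown op: {op}")
    return target


# Replica state after the initial full load (hypothetical rows).
replica = {
    1: {"name": "Ada", "tier": "gold"},
    2: {"name": "Grace", "tier": "silver"},
}

# Only the changes travel over the network, not the whole table.
change_events = [
    {"op": "update", "key": 2, "row": {"name": "Grace", "tier": "gold"}},
    {"op": "insert", "key": 3, "row": {"name": "Linus", "tier": "bronze"}},
    {"op": "delete", "key": 1},
]
apply_changes(replica, change_events)

# The ERP example above: 10,000 changed rows out of 10 million.
changed_fraction = 10_000 / 10_000_000
```

Events must be applied in the order they occurred at the source. Replaying them out of order could resurrect a deleted row or overwrite a newer update with an older one.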

ETL vs ELT vs CDC At a Glance

This table provides a direct comparison of the primary architectural patterns.

| Attribute | ETL (Extract, Transform, Load) | ELT (Extract, Load, Transform) | CDC (Change Data Capture) |
|---|---|---|---|
| Data Transformation | Occurs on a separate staging server before loading. | Happens directly within the cloud data warehouse after loading. | Captures only changed data; transformation can follow ETL or ELT logic. |
| Data State in Warehouse | Only clean, structured, and transformed data is stored. | Raw, unprocessed data is stored alongside transformed data. | Warehouse data is kept in near real-time sync with the source system. |
| Ideal Use Case | Structured data, compliance-heavy industries, on-premise warehouses. | Big data, semi-structured data, fast-paced analytics, data exploration. | Real-time analytics, database replication, minimizing source system load. |
| Flexibility | Lower. Transformation logic is fixed before loading. | Higher. Raw data is available for multiple, future transformations. | Highest for data freshness. It’s about what you move, not how you transform it. |
| Speed to Insight | Slower, as transformation is a bottleneck upfront. | Faster, as raw data is available for querying almost immediately. | Near-instantaneous for use cases dependent on the latest data. |

Selecting the right pattern, or more often a hybrid approach, is a foundational architectural decision. A deeper dive into these concepts can be found in established data integration best practices.

The Payoff and the Pitfalls: Weighing Benefits Against Risks

Cloud data integration is a strategic business decision, not merely a technical implementation. A successful project can create a significant competitive advantage by transforming data into a valuable, accessible asset. However, overlooking the inherent risks can lead to costly failures that undermine the project’s goals. A pragmatic approach requires a clear understanding of both the benefits and the potential pitfalls.

The Business Case for Integration

A well-architected cloud data integration strategy delivers quantifiable business value.

- Fueling AI and ML Ambitions: Effective machine learning models depend on a continuous supply of clean, consolidated data. A robust integration strategy provides this essential fuel for projects ranging from predictive analytics to generative AI.

- Sharpening Your Analytics: Creating a single source of truth eliminates the data silos and conflicting reports that hinder effective decision-making. Business intelligence teams often see their time-to-insight reduced by over 30%, leading to faster, more reliable analytics.

- Boosting Operational Efficiency: Modern integration is fundamentally about automation. It replaces brittle, manual processes with resilient, automated data pipelines, freeing engineers to focus on high-value innovation rather than maintenance.

The primary value of cloud data integration is the compression of time between a business event and an informed decision based on that event. It converts data from a historical record into a real-time strategic asset.

Navigating the Hidden Dangers

While the benefits are significant, the path to successful integration is fraught with risks that carry financial and strategic consequences.

The modern data landscape is inherently complex. The average enterprise now uses over 300 SaaS applications, creating a web of data silos. While integration platforms are designed to solve this, the process introduces new challenges. The current state of enterprise data integration adoption highlights the scale of this complexity.

The most common risks include cost overruns, compliance failures, and vendor lock-in.

Runaway Cloud Costs

The pay-as-you-go cloud model offers flexibility but can lead to uncontrolled spending if pipelines are inefficient. A poorly configured job processing excessive data or running too frequently can quickly exhaust a cloud budget.

Primary drivers of cost overruns:

- Inefficient Data Movement: High egress fees can result from moving large data volumes between cloud regions or out of a cloud provider’s network, for example back to on-premise systems.

- Redundant Processing: Rerunning transformations on entire datasets instead of just incremental changes consumes expensive compute resources.

- Idle Resources: Over-provisioning compute clusters that remain idle for long periods wastes money.
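The redundant-processing driver is commonly addressed with a high-water mark: each run processes only rows modified since the previous run. The sketch below assumes every row carries an `updated_at` timestamp and that the watermark is persisted between runs; both are illustrative assumptions, not features of any particular platform.

```python
from datetime import datetime


def select_incremental(rows, watermark):
    """Return rows changed after the watermark, plus the new watermark."""
    fresh = [row for row in rows if row["updated_at"] > watermark]
    # Keep the old watermark when nothing changed.
    new_watermark = max((row["updated_at"] for row in fresh), default=watermark)
    return fresh, new_watermark


# Hypothetical source table.
source_rows = [
    {"id": 1, "updated_at": datetime(2024, 1, 1)},
    {"id": 2, "updated_at": datetime(2024, 1, 2)},
    {"id": 3, "updated_at": datetime(2024, 1, 3)},
]

# First run: only rows newer than the stored watermark are processed.
first_batch, watermark = select_incremental(source_rows, datetime(2024, 1, 1))

# Second run with no new changes: nothing to process, no compute spent.
second_batch, watermark_after = select_incremental(source_rows, watermark)
```

A timestamp watermark cannot see hard deletes at the source, which is one reason log-based CDC is often preferred when deletions matter.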

Compliance and Governance Failures

Moving data across multiple systems and cloud environments introduces significant compliance risks. Without robust governance, sensitive data like Personally Identifiable Information (PII) can be exposed, leading to violations of regulations like GDPR or CCPA. Such failures can result in substantial fines and reputational damage.

The Strategic Peril of Vendor Lock-In

Selecting a cloud data integration platform is a long-term commitment. Many proprietary tools utilize unique connectors, transformation languages, and closed architectures that make it difficult and expensive to migrate to another vendor. This vendor lock-in can stifle innovation, limit the adoption of better technologies, and create dependency on a single vendor’s pricing and product roadmap. A superior strategy involves choosing platforms built on open standards that ensure interoperability.

Implementing Security And Governance That Works

Security and governance are frequently treated as afterthoughts in data projects, a practice that is untenable in cloud environments. Effective cloud data integration requires that security be designed into the architecture from the outset, not applied retroactively. This means moving beyond basic encryption and embedding security controls directly into data pipelines. When implemented correctly, governance becomes an automated, integrated function rather than a manual bottleneck.

Go Beyond Basic Access Controls

Effective governance extends beyond controlling access to the final data warehouse. It requires granular control within the integration platform itself. Role-Based Access Control (RBAC) should be applied at the integration layer to manage permissions for creating, modifying, and executing data pipelines.

A mature security model shifts from asking ‘Who can access the warehouse?’ to ‘Who has permissions to build, modify, or view the pipelines that feed it?’ This proactive stance prevents unauthorized data movement at its source.

Implementing RBAC within the integration tool allows for the creation of specific, granular rules:

- Data Engineers can be granted full permissions for specific data domains (e.g., marketing pipelines) while being denied access to sensitive financial data pipelines.

- BI Analysts can be given read-only access to pipeline logs and metadata for troubleshooting purposes, without the ability to alter pipeline logic.

- Auditors can receive temporary, view-only access to compliance reports and data lineage maps, fulfilling regulatory requirements without exposing configuration settings.

This approach enforces the principle of least privilege, minimizing the risk of accidental data exposure or malicious activity.
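The three example roles above could be modeled roughly as follows. The role names, domains, and action names are hypothetical, and a real integration platform would supply its own permission model. The sketch shows the deny-by-default check that least privilege implies.

```python
# Hypothetical permission sets: (data domain, action) pairs per role.
ROLE_PERMISSIONS = {
    "data_engineer_marketing": {
        ("marketing", "create"),
        ("marketing", "modify"),
        ("marketing", "execute"),
        ("marketing", "view_logs"),
    },
    "bi_analyst": {
        ("marketing", "view_logs"),
        ("finance", "view_logs"),
    },
    "auditor": {
        ("marketing", "view_lineage"),
        ("finance", "view_lineage"),
    },
}


def is_allowed(role, domain, action):
    """Deny by default: allow only explicitly granted (domain, action) pairs."""
    return (domain, action) in ROLE_PERMISSIONS.get(role, set())
```

An unknown role or an ungranted action falls through to `False`, so a new pipeline domain is inaccessible until someone grants access on purpose. Time-limited access for auditors would need an expiry check on top of this, which is omitted here.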

Proactive Data Protection During Transit

Data must be protected not only at rest and in transit but also during processing. Data pipelines often use temporary staging areas where sensitive information like Personally Identifiable Information (PII) can be exposed.

Data masking is a critical control for mitigating this risk. Masking techniques should be applied during the transformation stage to de-sensitize data before it moves through the pipeline.

- Redaction: Replaces sensitive values with fixed characters (e.g., a Social Security Number becomes XXX-XX-XXXX).

- Substitution: Replaces real values with consistent but fictitious identifiers, allowing for behavioral analysis without exposing personal identity.

- Shuffling: Randomizes the order of values within a column, preserving that column’s overall distribution while breaking the link to individual records.

By masking data mid-flight, you render it useless to unauthorized parties who might gain access to staging environments. This is a crucial defense for complying with privacy regulations like GDPR and CCPA.
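The three masking techniques can be sketched with the Python standard library alone. The function names are illustrative, and using a keyed hash (HMAC) for substitution is one assumed way to get consistent fictitious identifiers; other schemes such as lookup tables or tokenization services are equally valid.

```python
import hashlib
import hmac
import random
import re


def redact_ssn(value):
    """Redaction: replace every digit with a fixed character."""
    return re.sub(r"\d", "X", value)


def substitute(value, secret):
    """Substitution: map a real value to a consistent pseudonym.

    The same input and secret always yield the same output, so joins and
    behavioral analysis still work on the masked column.
    """
    digest = hmac.new(secret, value.encode("utf-8"), hashlib.sha256).hexdigest()
    return f"user_{digest[:12]}"


def shuffle_column(values, seed=None):
    """Shuffling: reorder values in a column without changing the values."""
    shuffled = list(values)
    random.Random(seed).shuffle(shuffled)
    return shuffled


masked_ssn = redact_ssn("123-45-6789")
alias_a = substitute("ada@example.com", b"hypothetical-secret")
alias_b = substitute("ada@example.com", b"hypothetical-secret")
salaries = [50_000, 62_000, 75_000, 91_000]
shuffled_salaries = shuffle_column(salaries, seed=7)
```

The secret used for substitution has to be protected like any other credential, since anyone holding it can recompute the pseudonym for a known value.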

Maintain Clear Data Lineage for Compliance

For auditing and compliance purposes, organizations must be able to demonstrate the complete lifecycle of their data. Data lineage provides this detailed audit trail, tracking data from its source through all transformations to its final destination.

Modern cloud integration platforms can automatically generate and visualize data lineage, creating a clear map of every data flow. This capability is invaluable for debugging pipeline failures and assessing the impact of proposed changes. For compliance, it provides verifiable proof of how data is handled, enabling organizations to respond quickly and accurately to regulatory inquiries. Understanding the range of cloud data security challenges is the first step toward building a framework capable of delivering this level of visibility.
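At its core, lineage is a directed graph of datasets. The sketch below uses hypothetical dataset names and a hand-written edge list; a real platform would derive the edges from pipeline metadata. It shows the two queries described above: tracing a dataset back to its sources for an audit, and finding everything downstream of a dataset for impact analysis.

```python
from collections import defaultdict

# Hypothetical lineage edges: (source dataset, derived dataset).
LINEAGE_EDGES = [
    ("crm.contacts", "staging.contacts"),
    ("erp.invoices", "staging.invoices"),
    ("staging.contacts", "analytics.customer_revenue"),
    ("staging.invoices", "analytics.customer_revenue"),
    ("analytics.customer_revenue", "dashboards.exec_summary"),
]


def build_graphs(edges):
    """Index the edges in both directions."""
    parents, children = defaultdict(set), defaultdict(set)
    for source, target in edges:
        parents[target].add(source)
        children[source].add(target)
    return parents, children


def reachable(start, adjacency):
    """Collect every node reachable from start, excluding start itself."""
    seen, stack = set(), [start]
    while stack:
        node = stack.pop()
        for neighbor in adjacency.get(node, ()):
            if neighbor not in seen:
                seen.add(neighbor)
                stack.append(neighbor)
    return seen


PARENTS, CHILDREN = build_graphs(LINEAGE_EDGES)

# Audit question: where did the executive dashboard's data come from?
dashboard_sources = reachable("dashboards.exec_summary", PARENTS)

# Impact question: what breaks if the CRM contacts table changes?
crm_impact = reachable("crm.contacts", CHILDREN)
```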

How to Evaluate and Select the Right Partner

Choosing an implementation partner for a cloud data integration project is a critical decision. The right partner provides strategic guidance and technical expertise, and helps avoid costly mistakes. The wrong partner can lead to budget overruns, significant technical debt, and project failure. This decision is not merely about procuring a tool but about securing proven expertise.

The market for these services is expanding rapidly. In the U.S. alone, the data integration services market generated USD 7,143.6 million in 2024 and is projected to reach USD 12,113.8 million by 2030. This growth reflects a market-wide recognition that specialized consultancies offer a more effective path to success than attempting complex integrations solely in-house. Additional details on the US data integration market growth are available in recent reports.

Go Beyond Surface-Level Certifications

Certifications from platforms like Snowflake or Databricks are a baseline qualification, not a guarantee of expertise. Any consultancy can acquire certifications. The goal is to differentiate between theoretical knowledge and practical, real-world experience.

Move beyond standard questions about successful projects and probe how a prospective partner handles adversity.

A partner’s competence is best revealed not by their successes, but by how they analyze their failures. Ask for an anonymized account of a project that faced significant challenges or failed, and specifically what technical and procedural lessons were learned from the experience.

This question cuts through sales rhetoric. A mature firm will provide a thoughtful analysis that demonstrates humility and a commitment to process improvement. An inexperienced firm will likely become defensive or claim to have never had a project fail—a significant red flag.

The Essential RFP Checklist

A well-structured Request for Proposal (RFP) is essential for a standardized, objective comparison of potential partners. Your RFP should compel vendors to provide specific, evidence-based answers rather than generic marketing material.

Ensure your RFP demands concrete details on:

- Industry-Specific Experience: Require case studies relevant to your industry. A firm with experience navigating healthcare compliance is better suited for a hospital system than one focused exclusively on retail.

- Team Composition and Experience: Request anonymized profiles of the proposed project team. Look for a balanced team of senior architects and experienced engineers, not a team dominated by junior staff.

- Technology Stack Agnosticism: Assess whether the partner is objective. A consultancy that exclusively recommends one tool may be influenced by sales incentives rather than your specific needs.

- Proposed Project Governance: Demand a clear, documented methodology for managing communication, project timelines, and scope changes. A well-defined process indicates discipline and professionalism.

A rigorous RFP process is a critical investment that systematically identifies and disqualifies unsuitable partners. For further guidance, review established criteria for selecting data engineering consulting services.

Vendor And Consultancy RFP Red Flags

During the evaluation process, be vigilant for common warning signs that may indicate a lack of experience, transparency, or a poor organizational fit.

| Red Flag Category | Specific Warning Sign | Why It Matters |
|---|---|---|
| Pricing and Contracts | Vague, bundled pricing models without clear line items. | This obscures the true cost of services and can hide low-value offerings. A detailed cost breakdown is non-negotiable. |
| Technical Depth | Over-reliance on a single technology stack or vendor. | A true partner recommends the optimal tool for the problem, not just the tool they know best. This indicates a lack of versatility. |
| Team and Expertise | A “bait-and-switch” with the project team. | The senior experts presented during the sales process are replaced by a junior team post-contract. Insist on meeting the core delivery team. |
| Communication | Evasive answers to tough questions, especially about failures. | A lack of transparency during the sales cycle is a strong predictor of behavior when project challenges inevitably arise. |

Identifying these red flags is as important as analyzing the technical components of a proposal.

Cloud Data Integration FAQs: Your Questions, Answered

Practical questions and concerns inevitably arise during the planning and execution of cloud data integration projects. Here are answers to some of the most common inquiries.

What Is a Realistic Timeline for Our First Integration Project?

A simple point-to-point data connection can be established in days, but a foundational project—such as integrating a core ERP system with a cloud data warehouse—requires a more realistic timeline of three to six months. This duration is necessary to accommodate critical, non-negotiable phases.

A typical project timeline includes:

- Discovery and Scoping (2-4 weeks): In-depth analysis of source data quality, business logic, and stakeholder requirements to define a clear project scope.

- Architecture Design (2-3 weeks): Selection of integration patterns (ETL, ELT, CDC) and design of scalable, resilient data pipelines.

- Development and Testing (6-12 weeks): Implementation of pipelines, transformation logic, and rigorous data validation and performance testing.

- Deployment and UAT (2-4 weeks): Go-live deployment followed by User Acceptance Testing, where business stakeholders validate the data and outputs.

Attempting to accelerate these phases often results in technical debt, which is far more costly to remedy later. For a well-executed project, a six-month payback period is a reasonable target, which is why correct implementation matters more than speed.
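As a quick check on the three-to-six-month estimate, the phase ranges listed above can be summed. The calculation assumes the phases run strictly one after another with no overlap, which a real project plan may not follow.

```python
# Phase durations in weeks (low, high), taken from the list above.
PHASES_WEEKS = {
    "Discovery and Scoping": (2, 4),
    "Architecture Design": (2, 3),
    "Development and Testing": (6, 12),
    "Deployment and UAT": (2, 4),
}

WEEKS_PER_MONTH = 52 / 12  # average calendar month

min_weeks = sum(low for low, _ in PHASES_WEEKS.values())    # 12
max_weeks = sum(high for _, high in PHASES_WEEKS.values())  # 23

min_months = min_weeks / WEEKS_PER_MONTH
max_months = max_weeks / WEEKS_PER_MONTH
```

Twelve to 23 weeks works out to a little under three months at the low end and a little over five at the high end, which leaves some slack inside the six-month upper bound for handoffs between phases.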

Can We Handle Cloud Data Integration In-House?

Building an in-house team is feasible but challenging due to a significant talent shortage. Experienced data engineers with expertise across multiple cloud platforms and integration tools are difficult to recruit and retain. According to industry reports, 90% of organizations struggle with a critical lack of data talent.

An in-house approach is most viable for large enterprises with substantial budgets and mature IT organizations. For most other companies, a hybrid model or engaging an expert consultancy is a more pragmatic and efficient strategy.

Outsourcing is a strategic decision to accelerate time-to-value, not a sign of internal weakness. Expert partners provide proven frameworks and cross-industry experience, enabling organizations to avoid common pitfalls that can delay internal teams for months.

A common and effective strategy is to use a partner for the initial architectural design and implementation while upskilling the internal team to manage long-term maintenance and operations.

How Do We Choose Between a Platform and Custom Code?

This is the classic “build vs. buy” dilemma. While custom scripts (e.g., in Python) may seem adequate for simple, isolated tasks, a dedicated integration platform is almost always the superior long-term solution for enterprise needs. The decision should be based on a total cost of ownership (TCO) analysis.

- Custom Code: Requires specialized developers for initial creation and ongoing maintenance. It typically lacks built-in governance, monitoring, and error handling. Scaling becomes difficult as each new data source requires custom development, leading to a brittle and poorly documented system.

- Integration Platform: Provides pre-built connectors, automated security and governance features, visual development interfaces, and out-of-the-box monitoring and alerting. While the initial license cost is higher, it significantly reduces long-term operational overhead.

The market has largely shifted away from custom-coded solutions. Organizations that rely on custom scripts often find that 80% of their data engineering time is consumed by maintenance, leaving little capacity for innovation.

What Are the Most Common Hidden Costs?

Standard budgets typically account for software licensing and cloud compute costs, but several other expenses frequently arise unexpectedly. Proactive planning for these costs is essential to avoid budget overruns.

- Data Egress Fees: Cloud providers charge for moving data out of their networks or between different geographic regions. An inefficiently designed pipeline that frequently transfers large datasets can incur substantial egress fees.

- Rework from Poor Data Quality: Neglecting to profile and clean data at the source leads to downstream consequences. Broken pipelines, inaccurate analytics, and wasted engineering time are the direct costs of poor initial data quality assessment.

- Ongoing Maintenance and Optimization: Data integration systems are not static. Source system APIs change, business requirements evolve, and data volumes increase. A sound practice is to budget an additional 15-20% of the initial project cost annually for ongoing maintenance, optimization, and enhancements.

Anticipating these second-order costs is critical for ensuring a project remains on budget and delivers its expected return on investment.
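The 15-20% maintenance guideline is easy to turn into a budget line. The initial project cost below is a made-up figure used purely for illustration; only the percentage range comes from the guidance above.

```python
def maintenance_budget(initial_cost, low_pct=15, high_pct=20):
    """Annual maintenance range as whole currency units (integer math)."""
    return initial_cost * low_pct // 100, initial_cost * high_pct // 100


# Hypothetical initial project cost, for illustration only.
initial_cost = 400_000
annual_low, annual_high = maintenance_budget(initial_cost)

# Three-year cost of ownership under the same assumption.
three_year_low = initial_cost + 3 * annual_low
three_year_high = initial_cost + 3 * annual_high
```

On that assumed figure, maintenance alone adds 60,000 to 80,000 per year, so the three-year total lands between 580,000 and 640,000 before egress fees or data quality rework are counted.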

Navigating the complexities of cloud data integration successfully often depends on selecting the right implementation partner. DataEngineeringCompanies.com provides independent, data-driven rankings and resources to assist in vetting and choosing the best consultancy for your project. Review our 2026 expert rankings to identify a partner with proven experience in your industry and technology stack.
