Cloud Data Security Challenges: Practical Tactics for Safer Cloud Data

Cloud data security is a business risk, not merely an IT problem. Data breaches, misconfigurations, and compliance failures are primary concerns for executives as organizations migrate to the cloud. Protecting digital assets and maintaining customer trust requires confronting these issues directly.

Why Cloud Data Security Is Now a Boardroom Issue

Data security has moved from the server room to the boardroom because migrating to the cloud fundamentally changes a company’s risk profile. An on-premises data center operates like a single, guarded vault with a clear perimeter and controlled access. In contrast, a modern multi-cloud environment is like a global network of ATMs.

Each endpoint in this distributed model is accessible from anywhere, presenting unique vulnerabilities. While this structure drives business agility, it also dramatically expands the potential attack surface.

A Snapshot of Top Cloud Data Security Threats

To understand the current threat landscape, it is useful to categorize common threats. These are the persistent issues data leaders and security teams address in cloud environments.

| Challenge Category | Common Root Cause | Primary Business Impact |
|---|---|---|
| Data Breaches | Weak credentials, phishing, or exploiting unpatched software. | Financial loss, regulatory fines, and severe reputational damage. |
| Misconfigurations | Human error, lack of oversight, or complex cloud settings. | Unintentional data exposure, unauthorized access, and compliance violations. |
| Identity & Access | Overly permissive roles, poor credential management, no MFA. | Escalated privileges for attackers, leading to widespread system compromise. |
| Insecure Pipelines | Vulnerabilities in code, open-source libraries, or CI/CD tools. | Malicious code injection, data tampering, or compromised applications. |
| Third-Party Risks | Inadequate vendor security, compromised SaaS tools, or supply chain attacks. | Data exfiltration through a trusted partner, loss of intellectual property. |
| Compliance Gaps | Misunderstanding shared responsibility, poor data governance. | Hefty fines (GDPR, CCPA), loss of certifications, and legal battles. |

Each challenge represents a distinct attack vector, but all of them put critical business data at risk. Understanding these root causes is the first step toward building an effective defense.

The Innovation vs. Security Clash

A natural tension exists between two priorities: the business demand for speed and AI-driven innovation, and the organizational need for robust security and compliance. The two often seem to be in direct opposition.

The pressure to accelerate delivery can lead teams to bypass security protocols, creating vulnerabilities that remain dormant until discovered by an attacker. This is why a thorough cloud migration assessment checklist is critical—it forces a systematic mapping of risks before they become active threats.

The modern executive’s dilemma is balancing the immense potential of cloud data with the severe financial and reputational costs of a breach. Ignoring cloud data security is no longer an option; it’s a direct threat to business continuity.

The Staggering Financial and Reputational Costs

The consequences of inadequate security are significant. In 2023, 82% of all data breaches involved data stored in the cloud, a clear indication of where vulnerabilities lie. For 2025, SentinelOne reports that cloud breaches have surpassed on-premises incidents and that weekly cyberattacks have risen 47% year over year.

A single breach can trigger millions in regulatory fines, erode customer trust, and inflict long-term damage on a brand.

Data leaders and executives must champion a security-first culture. Protection cannot be an afterthought; it must be integrated into the cloud strategy from the outset. This proactive stance is the only effective way to manage today’s complex cloud data security challenges.

The Six Critical Flaws in Your Cloud Security Armor

Attackers often exploit simple, preventable errors rather than exotic zero-day exploits. By breaking down the threat landscape into six core areas, we can identify and address real-world system weaknesses.

1. Pervasive Misconfigurations

The most common and damaging vulnerability in cloud security is misconfiguration. This is the digital equivalent of leaving a ground-floor window unlocked—an open invitation for unauthorized access.

In the cloud, this manifests as an Amazon S3 bucket set to “public” or a database accepting connections from any IP address. These are simple setup errors, yet they are responsible for exposing vast amounts of sensitive data. Gartner predicts that through 2025, 99% of cloud security failures will be the customer’s fault, primarily from such mistakes. With 45% of cloud data breaches tracing back to misconfigurations, human error remains the largest single threat vector.
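To make these two examples concrete, here is a minimal sketch of the kind of check a posture-scanning tool automates. It assumes a hypothetical, simplified inventory format (plain dictionaries with `kind`, `public_access`, and `ingress` fields) rather than any cloud provider's real API response.

```python
from dataclasses import dataclass

@dataclass
class Finding:
    resource: str
    issue: str

# CIDR blocks that mean "any IPv4 address" and "any IPv6 address".
OPEN_CIDRS = {"0.0.0.0/0", "::/0"}

def scan_inventory(resources: list[dict]) -> list[Finding]:
    """Flag buckets open to the public and databases reachable from any IP.

    The record shape is hypothetical; real tools read it from provider APIs.
    """
    findings = []
    for res in resources:
        name = res["name"]
        if res["kind"] == "bucket" and res.get("public_access", False):
            findings.append(Finding(name, "bucket allows public access"))
        if res["kind"] == "database":
            for rule in res.get("ingress", []):
                if rule["cidr"] in OPEN_CIDRS:
                    findings.append(Finding(name, f"port {rule['port']} open to {rule['cidr']}"))
    return findings

inventory = [
    {"name": "customer-exports", "kind": "bucket", "public_access": True},
    {"name": "orders-db", "kind": "database", "ingress": [{"cidr": "0.0.0.0/0", "port": 5432}]},
    {"name": "analytics-db", "kind": "database", "ingress": [{"cidr": "10.0.0.0/16", "port": 5432}]},
]

for f in scan_inventory(inventory):
    print(f"{f.resource}: {f.issue}")
```

Only the first two resources are flagged; the database restricted to a private range passes.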

2. Identity and Access Mismanagement

Identity and access management (IAM) controls who can access what data and with what level of authority. Done poorly, it is like distributing master keys without restriction, and in complex cloud environments it is easy to grant overly broad permissions.

A common example is giving a developer full administrative access to a production database to expedite a task. This creates a significant security hole. If that developer’s credentials are compromised, an attacker gains control over the entire data system.

The goal should always be the Principle of Least Privilege: Give every user and every service the absolute minimum access they need to do their job. Anything more is a risk you don’t need to take.
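One practical way to enforce least privilege is to lint policies before they are attached. The sketch below reads an AWS-style IAM policy document (the `Statement`, `Effect`, `Action`, and `Resource` elements) and flags `Allow` statements with wildcard actions or resources. It is deliberately incomplete: elements such as `NotAction` and `Condition` are ignored.

```python
import json

def as_list(value):
    """IAM accepts a single value or a list for Statement, Action, and Resource."""
    return value if isinstance(value, list) else [value]

def overly_broad_statements(policy: dict) -> list[str]:
    """Return warnings for Allow statements that violate least privilege."""
    warnings = []
    for i, stmt in enumerate(as_list(policy.get("Statement", []))):
        if stmt.get("Effect") != "Allow":
            continue
        for action in as_list(stmt.get("Action", [])):
            if action == "*" or action.endswith(":*"):
                warnings.append(f"statement {i}: wildcard action '{action}'")
        for resource in as_list(stmt.get("Resource", [])):
            if resource == "*":
                warnings.append(f"statement {i}: applies to all resources")
    return warnings

policy = json.loads("""
{
  "Version": "2012-10-17",
  "Statement": [
    {"Effect": "Allow", "Action": "s3:*", "Resource": "*"},
    {"Effect": "Allow", "Action": ["s3:GetObject"], "Resource": "arn:aws:s3:::reports-bucket/*"}
  ]
}
""")

for w in overly_broad_statements(policy):
    print(w)
```

The first statement is the "full admin to get the task done" pattern described above; the second, scoped to reading one bucket, passes.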

3. Insecure Development Pipelines

A CI/CD (Continuous Integration/Continuous Delivery) pipeline is the automated factory that builds and deploys software. If flawed components are introduced into this factory, every resulting application will have built-in vulnerabilities.

Common pipeline security issues include:

- Vulnerable Dependencies: Using open-source libraries with known, unpatched security flaws.

- Exposed Secrets: Hardcoding API keys, passwords, and other credentials directly into source code.

- Lack of Scanning: Pushing code and container images into production without first scanning them for security issues.

An insecure pipeline systematically introduces vulnerabilities across the entire software supply chain, making remediation a large-scale, retroactive effort.
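As an illustration of the "Exposed Secrets" issue, here is a minimal pre-commit style scanner. The two regular expressions are illustrative assumptions: one matches the `AKIA` prefix used by AWS access key IDs, and the other matches quoted literals assigned to credential-like names. Dedicated secret scanners use far larger and better-tuned rule sets.

```python
import re

# Illustrative rules only; not a complete or production-grade rule set.
SECRET_PATTERNS = {
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "hardcoded_credential": re.compile(
        r"""(?i)\b(password|passwd|secret|api_key|token)\s*[:=]\s*['"][^'"]{4,}['"]"""
    ),
}

def find_secrets(source: str) -> list[tuple[int, str]]:
    """Return (line_number, rule_name) for each line that looks like it holds a secret."""
    hits = []
    for lineno, line in enumerate(source.splitlines(), start=1):
        for rule, pattern in SECRET_PATTERNS.items():
            if pattern.search(line):
                hits.append((lineno, rule))
    return hits

snippet = '''
db_host = "warehouse.internal"
password = "hunter2-prod"
aws_key = "AKIAABCDEFGHIJKLMNOP"
token = os.environ["API_TOKEN"]
'''

print(find_secrets(snippet))
```

The last line of the snippet is not flagged because it reads the token from the environment instead of embedding it, which is the pattern a pipeline should push developers toward.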

4. Direct Data Breaches and Exfiltration

This challenge involves active attempts to infiltrate systems and steal data. Attackers use established techniques like SQL injection, targeted phishing campaigns to harvest credentials, or exploitation of known software vulnerabilities to gain an initial foothold.
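SQL injection works when user input is spliced into the text of a query. The sketch below uses Python's built-in `sqlite3` module with an in-memory database and a hypothetical `customers` table to contrast string formatting with a parameterized query.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, email TEXT)")
conn.executemany(
    "INSERT INTO customers (email) VALUES (?)",
    [("ana@example.com",), ("li@example.com",)],
)

def lookup_unsafe(email: str):
    # VULNERABLE: the input becomes part of the SQL statement itself.
    return conn.execute(f"SELECT id, email FROM customers WHERE email = '{email}'").fetchall()

def lookup_safe(email: str):
    # SAFE: the value is passed separately as a bound parameter, never parsed as SQL.
    return conn.execute("SELECT id, email FROM customers WHERE email = ?", (email,)).fetchall()

payload = "' OR '1'='1"
print(len(lookup_unsafe(payload)))  # 2: the injected condition matches every row
print(len(lookup_safe(payload)))    # 0: no customer has that literal email
```

The same principle applies to any database driver: bind values as parameters rather than building query strings.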

Once inside, their objective is to locate and exfiltrate valuable assets—customer PII, financial reports, intellectual property—without detection. A major breach can start with a single phishing email that provides low-level access, which an attacker can then use to move laterally across the network.

5. Third-Party and Supply Chain Risks

An organization’s security perimeter extends to every SaaS provider, contractor, and third-party tool integrated into its stack. A vulnerability in a vendor’s system can become a direct vulnerability for the organization.

This is the nature of modern supply chain risk. For instance, granting a third-party analytics tool access to a data warehouse means a breach at that vendor could expose your data. Vetting the security practices of partners is as important as managing internal security.
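Vetting partners is partly a process question, but the access you grant them can be reviewed mechanically. The sketch below assumes a hypothetical record format for vendor grants (privileges, a `database.schema` scope string, and an optional expiry date) and flags grants that go beyond scoped, read-only, time-limited access.

```python
from datetime import date

# Hypothetical inventory of access granted to outside vendors.
vendor_grants = [
    {"vendor": "acme-analytics", "privileges": {"SELECT"},
     "scope": "warehouse.marketing", "expires": date(2025, 3, 31)},
    {"vendor": "bi-tool", "privileges": {"SELECT", "INSERT"},
     "scope": "warehouse", "expires": None},
]

WRITE_PRIVILEGES = {"INSERT", "UPDATE", "DELETE"}

def review_grant(grant: dict, today: date) -> list[str]:
    """Flag vendor access that is broader or longer-lived than a scoped read-only grant."""
    issues = []
    if grant["privileges"] & WRITE_PRIVILEGES:
        issues.append("has write privileges")
    if "." not in grant["scope"]:
        issues.append("scoped to an entire database")
    if grant["expires"] is None:
        issues.append("never expires")
    elif grant["expires"] < today:
        issues.append("expired but still present")
    return issues

for g in vendor_grants:
    print(g["vendor"], review_grant(g, today=date(2025, 1, 15)))
```

A breach at the first vendor would expose one schema for a bounded period; a breach at the second could alter data across the whole warehouse indefinitely.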

6. Regulatory and Compliance Gaps

Failing to meet regulatory standards like GDPR, HIPAA, or CCPA is a security challenge. The borderless nature of the cloud makes it easy to inadvertently violate these regulations, such as storing European customer data in a US-based data center without the proper legal frameworks.

These gaps often arise from a misunderstanding of the Shared Responsibility Model. While the cloud provider (such as AWS or Azure) secures the underlying cloud infrastructure, the customer is always responsible for securing its data in the cloud. Assuming the provider handles every aspect of security can lead to failed audits, fines, and reputational damage.
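The data residency example above is the kind of rule that can be checked continuously rather than discovered in an audit. This sketch assumes a hypothetical policy table mapping each data-subject jurisdiction to permitted storage regions, plus a hypothetical `transfer_mechanism` field recording any legal framework that permits storage elsewhere. The region names are real AWS regions, but the mapping is illustrative, not legal guidance.

```python
# Hypothetical residency policy: storage regions each jurisdiction's data may use.
ALLOWED_REGIONS = {
    "EU": {"eu-west-1", "eu-central-1"},
    "US": {"us-east-1", "us-west-2"},
}

# Hypothetical dataset catalog entries.
datasets = [
    {"name": "eu_customers", "subject_jurisdiction": "EU", "region": "us-east-1",
     "transfer_mechanism": None},
    {"name": "us_orders", "subject_jurisdiction": "US", "region": "us-west-2",
     "transfer_mechanism": None},
]

def residency_violations(datasets: list[dict]) -> list[str]:
    """Flag datasets stored outside their allowed regions with no documented legal basis."""
    violations = []
    for ds in datasets:
        allowed = ALLOWED_REGIONS.get(ds["subject_jurisdiction"], set())
        if ds["region"] not in allowed and not ds["transfer_mechanism"]:
            violations.append(f"{ds['name']}: {ds['subject_jurisdiction']} data in {ds['region']}")
    return violations

print(residency_violations(datasets))
```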

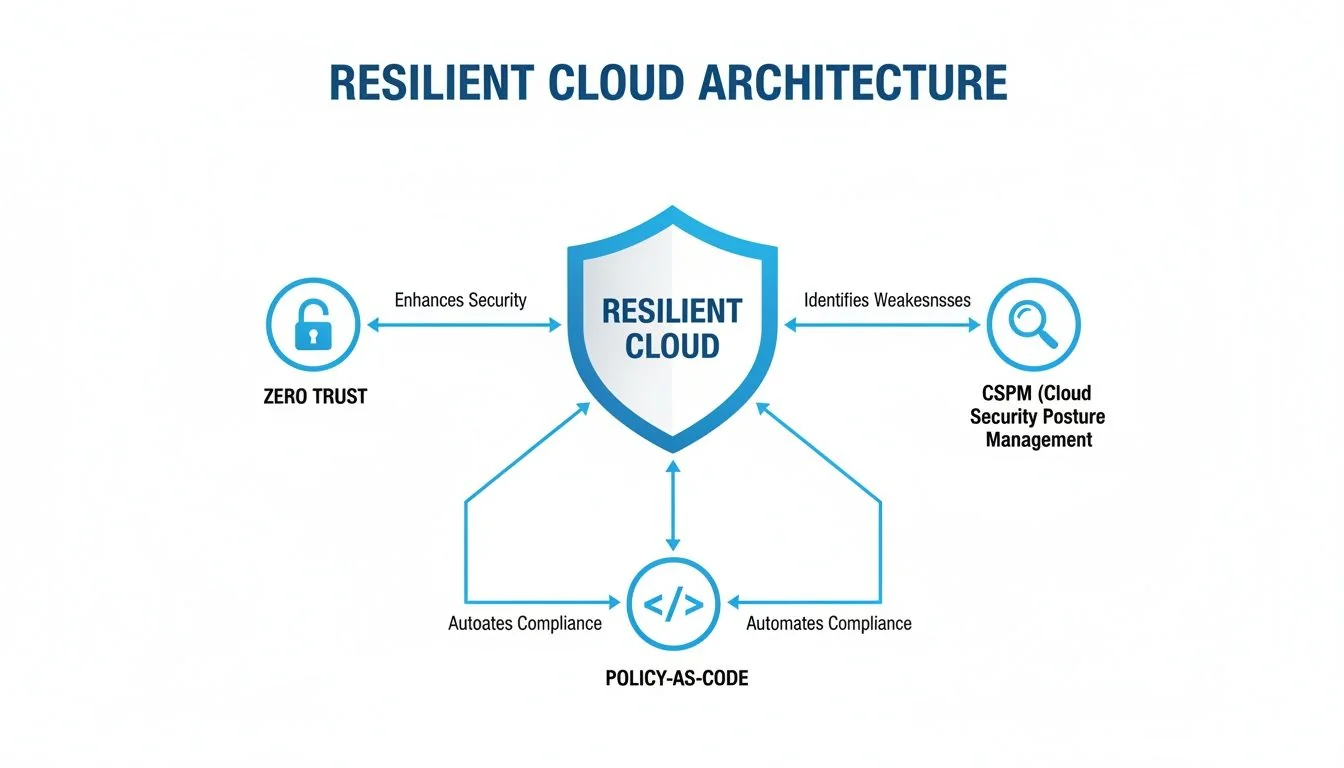

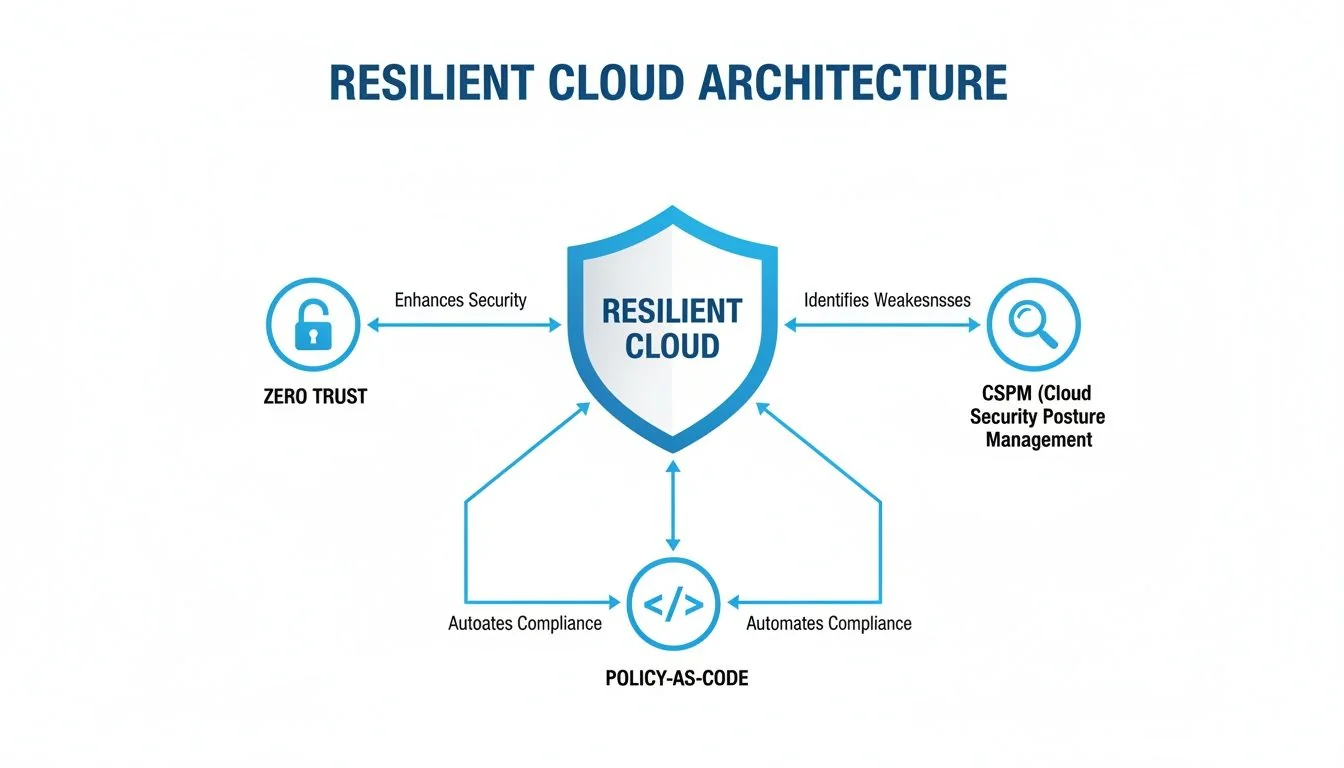

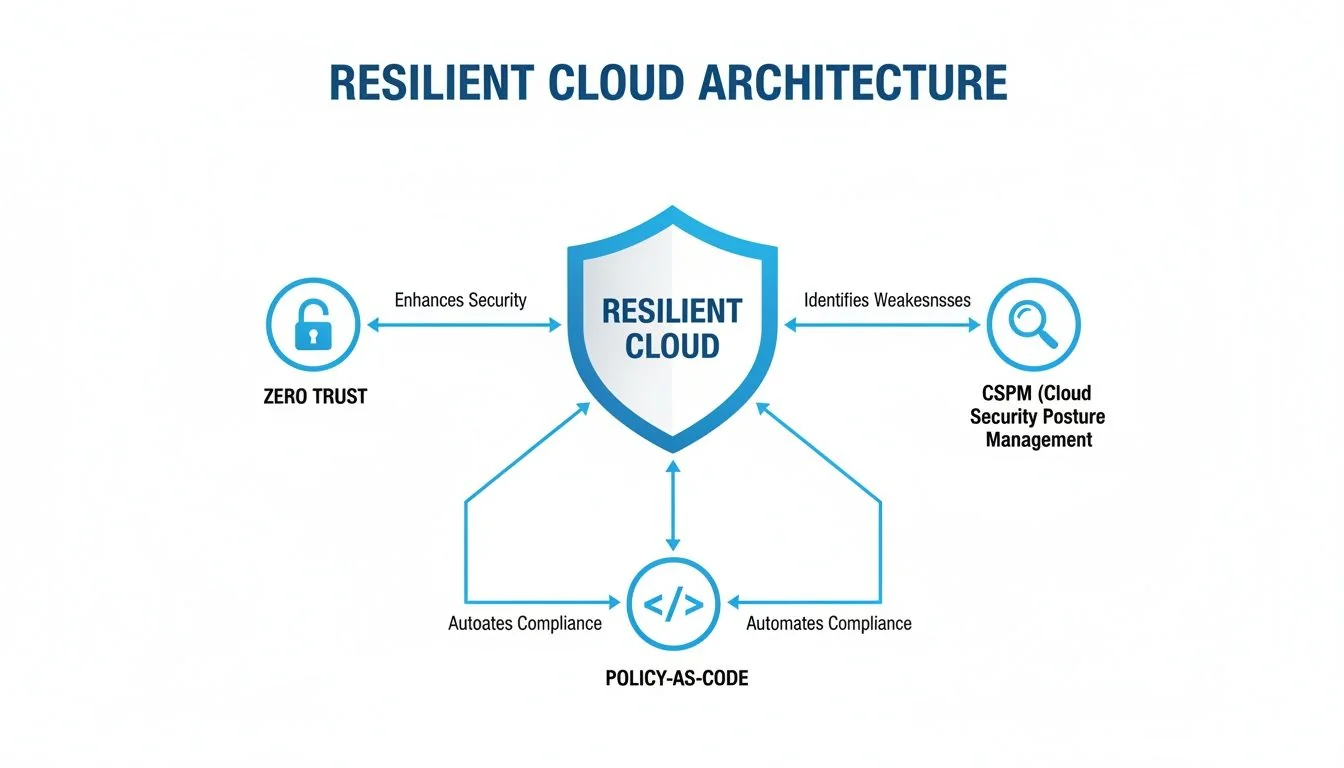

Building a Resilient Cloud Data Architecture

Identifying vulnerabilities is only the first step. Building an effective defense requires designing an architecture that is secure by design, not just patched reactively.

A resilient architecture is built on the assumption that attacks will occur. It is designed to contain damage, prevent lateral movement, and provide the visibility needed for rapid response. This proactive mindset is what separates a minor incident from a major breach.

Adopting a Zero Trust Mindset

The foundation of a modern, secure data architecture is Zero Trust. The outdated “trust but verify” model is replaced by “never trust, always verify.” Every user, device, and application is treated as a potential threat, regardless of its location.

In a data context, this means:

- Micro-segmentation: Creating tight, isolated security perimeters around individual data assets. A compromise in a development environment cannot easily spread to the production data warehouse.

- Granular Access Control: Granting access on a per-request basis with the minimum privileges required for a specific task, for only the necessary duration.

- Continuous Verification: Constantly re-evaluating authentication. If a user’s behavior becomes anomalous or their device shows signs of compromise, access is revoked instantly.
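Granular access control and continuous verification can be pictured as a policy decision made on every request. The sketch below is a simplified, hypothetical policy decision function: the `anomaly_score` input stands in for some behavioral detector, and `GRANTS` is a hypothetical store of just-in-time grants with expiry times. Network location plays no part in the decision.

```python
from dataclasses import dataclass
from datetime import datetime

@dataclass
class AccessRequest:
    user: str
    mfa_verified: bool
    device_compliant: bool
    anomaly_score: float  # 0.0 (normal) to 1.0 (highly unusual); hypothetical detector output
    resource: str
    action: str

# Hypothetical just-in-time grants: (user, resource, action) -> expiry time.
GRANTS = {
    ("dana", "prod.orders", "read"): datetime(2025, 1, 15, 18, 0),
}

ANOMALY_THRESHOLD = 0.8

def authorize(req: AccessRequest, now: datetime) -> tuple[bool, str]:
    """Evaluate each request independently; nothing is trusted by default."""
    if not req.mfa_verified:
        return False, "MFA required"
    if not req.device_compliant:
        return False, "device failed posture check"
    if req.anomaly_score >= ANOMALY_THRESHOLD:
        return False, "anomalous behavior"
    expiry = GRANTS.get((req.user, req.resource, req.action))
    if expiry is None:
        return False, "no grant for this resource and action"
    if now >= expiry:
        return False, "grant expired"
    return True, "allowed"

now = datetime(2025, 1, 15, 12, 0)
read_req = AccessRequest("dana", True, True, 0.1, "prod.orders", "read")
print(authorize(read_req, now))  # (True, 'allowed')
print(authorize(AccessRequest("dana", True, True, 0.1, "prod.orders", "write"), now))
```

Because the check runs per request, the same user is refused as soon as the grant expires, the device falls out of compliance, or behavior turns anomalous.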

A Zero Trust architecture fundamentally changes the security game. It moves the defense from a brittle, easy-to-crack perimeter to a flexible, resilient defense grid woven directly around your most critical data assets.

Leveraging Modern Security Tooling

Building a secure architecture at scale requires sophisticated, automated tools. Two categories are essential for managing cloud data security.

Cloud Security Posture Management (CSPM) tools act as automated security auditors. They continuously scan cloud environments against security benchmarks and flag misconfigurations in real time, such as a publicly exposed S3 bucket or an overly permissive firewall rule.

Cloud-Native Application Protection Platforms (CNAPP) offer an integrated approach, combining CSPM capabilities with other security functions like application and workload protection.

This diagram shows how CNAPP solutions bring together multiple security and compliance tools into a single, cohesive platform.

By consolidating these functions, CNAPPs provide a comprehensive view of risk, from infrastructure to application code, simplifying security operations.

Automating Security With Policy-as-Code

To prevent security from becoming a bottleneck, enforcement must be automated. Policy-as-Code (PaC) allows security and compliance rules to be defined in code, making them understandable to both humans and machines.

These policies are integrated directly into the CI/CD pipeline. For example, a policy could automatically block any infrastructure deployment that does not have encryption enabled. This “shift left” strategy identifies and resolves issues before they reach production. A well-defined policy is a core component of any effective security program, and you can learn more about building one with our data governance framework template.
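The encryption example above can be sketched as a CI gate over a Terraform plan. The script assumes the plan was exported with `terraform plan -out=plan.tfplan` followed by `terraform show -json plan.tfplan > plan.json`, and reads the `resource_changes` list from that JSON. The resource-to-attribute mapping covers only a small, illustrative subset of AWS resources, and a missing or unknown value is treated as a violation.

```python
import json
import sys

# Encryption attribute to require for each resource type (illustrative subset only).
ENCRYPTION_ATTRIBUTE = {
    "aws_db_instance": "storage_encrypted",
    "aws_rds_cluster": "storage_encrypted",
    "aws_ebs_volume": "encrypted",
}

def unencrypted_resources(plan: dict) -> list[str]:
    """Return addresses of resources being created or updated without encryption."""
    violations = []
    for rc in plan.get("resource_changes", []):
        attr = ENCRYPTION_ATTRIBUTE.get(rc.get("type"))
        change = rc.get("change", {})
        if attr is None or not {"create", "update"} & set(change.get("actions", [])):
            continue
        after = change.get("after") or {}
        if after.get(attr) is not True:  # absent or unknown counts as unencrypted
            violations.append(rc["address"])
    return violations

def main(path: str) -> int:
    with open(path) as fh:
        plan = json.load(fh)
    violations = unencrypted_resources(plan)
    for address in violations:
        print(f"DENY: {address} must enable encryption", file=sys.stderr)
    return 1 if violations else 0  # a non-zero exit code fails the CI job

if __name__ == "__main__" and len(sys.argv) > 1:
    sys.exit(main(sys.argv[1]))
```

Dedicated policy engines offer richer languages and reporting, but the shape is the same: the rule lives in version control and the pipeline refuses to deploy anything that violates it.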

Designing for a Secure Data Mesh

As organizations adopt decentralized architectures like the data mesh, security models must adapt. The data mesh philosophy treats data as a product, with domain teams owning their own data pipelines and services. Without proper management, this can lead to security fragmentation.

A secure data mesh architecture balances agility and control through:

- Centralized Governance: A central security team establishes global rules, policies, and standards for all domain teams.

- Federated Enforcement: Individual domain teams are responsible for implementing and enforcing these security controls within their data products.

- Automated Tooling: The central team provides self-service security tools, such as pre-configured access templates and vulnerability scanners, to help domain teams comply with standards.
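The split between central rules and federated enforcement can be expressed as a shared validation function that every domain team runs in its own pipeline. The policy contents and the data product manifest format below are hypothetical examples, not a standard schema.

```python
# Published once by the central security team (hypothetical rules).
GLOBAL_POLICY = {
    "required_metadata": {"owner", "classification"},
    "allowed_classifications": {"public", "internal", "confidential", "pii"},
}

def validate_data_product(manifest: dict, policy: dict = GLOBAL_POLICY) -> list[str]:
    """Run by each domain team before publishing a data product."""
    errors = []
    missing = policy["required_metadata"] - set(manifest)
    if missing:
        errors.append(f"missing metadata: {sorted(missing)}")
    classification = manifest.get("classification")
    if classification is not None and classification not in policy["allowed_classifications"]:
        errors.append(f"unknown classification: {classification}")
    if classification == "pii":
        unmasked = [c["name"] for c in manifest.get("columns", [])
                    if c.get("sensitive") and not c.get("masked")]
        if unmasked:
            errors.append(f"sensitive columns without masking: {unmasked}")
    return errors

# A domain team's (hypothetical) data product manifest.
orders = {
    "name": "orders_daily",
    "owner": "sales-domain",
    "classification": "pii",
    "columns": [
        {"name": "customer_email", "sensitive": True, "masked": False},
        {"name": "order_total", "sensitive": False},
    ],
}

print(validate_data_product(orders))
```

The central team changes the rules in one place; each domain keeps control of its own pipeline and release schedule.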

This federated model allows teams to innovate quickly without compromising the organization’s security posture. By combining these architectural patterns—Zero Trust, modern tooling, automation, and smart design—you can build a data platform that is both powerful and resilient.

A Practical Security Comparison of Snowflake and Databricks

The choice of data platform is a foundational security decision. The two leading platforms, Snowflake and Databricks, offer robust security features but have different approaches to governance and control. Aligning the platform’s native capabilities with your organization’s security requirements is crucial.

This diagram shows a conceptual model for a resilient cloud architecture, built on principles like Zero Trust, continuous monitoring with CSPM, and automated enforcement using Policy-as-Code.

A strong security posture is achieved by layering multiple, reinforcing controls that work in concert.

Snowflake’s Approach to Security and Governance

Snowflake’s architecture is built on a structured, centralized security model, which is well-suited for traditional enterprise data warehousing and organizations with strict compliance requirements.

The core of Snowflake’s security is its Role-Based Access Control (RBAC) system. This allows for the creation of a precise hierarchy of roles and privileges, ensuring users only access data they are explicitly authorized to see.
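In Snowflake, hierarchies are built by granting one role to another (for example, `GRANT ROLE analyst TO ROLE data_engineer`), and the receiving role inherits the granted role's privileges. The sketch below models that inheritance in plain Python to show how effective privileges are resolved. The roles and objects are hypothetical, and this is not Snowflake's API.

```python
from collections import deque

# Privileges granted directly to each role (hypothetical roles and objects).
ROLE_PRIVILEGES = {
    "reporting_reader": {("SELECT", "sales.reporting.revenue")},
    "analyst": {("SELECT", "sales.staging.orders")},
    "data_engineer": {("INSERT", "sales.staging.orders")},
}

# Roles granted to each role; the receiving role inherits their privileges.
ROLE_GRANTS = {
    "analyst": ["reporting_reader"],
    "data_engineer": ["analyst"],
}

def effective_privileges(role: str) -> set[tuple[str, str]]:
    """Walk the role hierarchy and collect direct plus inherited privileges."""
    seen, queue, privileges = {role}, deque([role]), set()
    while queue:
        current = queue.popleft()
        privileges |= ROLE_PRIVILEGES.get(current, set())
        for granted in ROLE_GRANTS.get(current, []):
            if granted not in seen:
                seen.add(granted)
                queue.append(granted)
    return privileges

def can(role: str, privilege: str, obj: str) -> bool:
    return (privilege, obj) in effective_privileges(role)

print(can("data_engineer", "SELECT", "sales.reporting.revenue"))  # True, inherited via analyst
print(can("reporting_reader", "SELECT", "sales.staging.orders"))   # False
```

Reviewing effective privileges rather than direct grants matters: a role that looks narrow on its own can inherit broad access through the hierarchy.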

Snowflake also includes advanced, native features:

- Dynamic Data Masking: This feature redacts sensitive data in a column based on the querying user's role. For example, a support agent might see a customer’s email in masked form, while an analyst sees the full address. The underlying data remains unchanged.

- Row-Level Security: Policies can be created to filter which rows a user can see in a table, a critical feature for multi-tenant analytics applications.

- End-to-End Encryption: Snowflake automatically encrypts all data at rest and in transit, with options for customer-managed keys.
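Snowflake implements these controls declaratively through masking policies and row access policies. To illustrate the concept only, the Python sketch below applies a hypothetical tenant-based row filter and a role-based email mask at read time, leaving the stored rows untouched. The role names, tenant model, and masking format are assumptions.

```python
from typing import Optional

# Hypothetical stored rows; they are never modified by the read path.
rows = [
    {"tenant": "acme", "email": "jane.doe@acme.com", "plan": "enterprise"},
    {"tenant": "globex", "email": "sam@globex.com", "plan": "starter"},
]

def mask_email(email: str) -> str:
    """Hide the local part of an address but keep the domain."""
    _, _, domain = email.partition("@")
    return "*****@" + domain if domain else "*****"

def query(rows: list[dict], role: str, tenant: Optional[str]) -> list[dict]:
    """Apply a row filter, then a column mask, at query time."""
    visible = [r for r in rows if tenant is None or r["tenant"] == tenant]  # row-level rule
    if role != "ANALYST":
        visible = [{**r, "email": mask_email(r["email"])} for r in visible]  # masking rule
    return visible

print(query(rows, role="SUPPORT", tenant="acme"))
print(query(rows, role="ANALYST", tenant=None)[1]["email"])  # sam@globex.com
```

In the platform itself the policy is attached once to the column or table, so every query path enforces it, which is the main advantage over re-implementing the logic in each application.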

This centralized, policy-driven approach simplifies the application of consistent governance rules across the platform.

Databricks and the Unity Catalog

Databricks, founded by the original creators of the open-source Apache Spark project, offers a more flexible, developer-centric approach. Its governance is unified through the Unity Catalog, which serves as a central governance layer for all data and AI assets, including tables, files, and machine learning models.

The Databricks model is a federated system, empowering individual teams to manage their own data assets within global governance standards set by a central authority. This model is effective for organizations with decentralized data teams and complex AI/ML pipelines.

Key security features in Databricks include:

- Fine-Grained Access Control: Unity Catalog allows permissions to be set on catalogs, schemas, tables, and views using standard SQL commands.

- Secure Cluster Management: Databricks provides strong controls for configuring and isolating compute clusters, preventing interference between workloads and limiting the blast radius of a potential compromise.

- Attribute-Based Access Control (ABAC): This emerging capability enables dynamic security policies based on user attributes like department, project, or location, rather than static roles alone.
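Unity Catalog's fine-grained model is hierarchical: reading a table requires `USE CATALOG` on its catalog and `USE SCHEMA` on its schema in addition to `SELECT`, and a privilege granted on a catalog or schema is inherited by the objects inside it. The sketch below models that rule in simplified form with hypothetical principals and grants. It ignores ownership, `ALL PRIVILEGES`, and metastore-level grants, and it is not the Databricks API.

```python
# Hypothetical grants: (principal, securable, privilege), using catalog.schema.table names.
UC_GRANTS = {
    ("analysts", "main", "USE CATALOG"),
    ("analysts", "main.sales", "USE SCHEMA"),
    ("analysts", "main.sales", "SELECT"),       # inherited by every table in main.sales
    ("interns", "main", "USE CATALOG"),
    ("interns", "main.hr.salaries", "SELECT"),  # but no USE SCHEMA on main.hr
}

def has(principal: str, securable: str, privilege: str) -> bool:
    return (principal, securable, privilege) in UC_GRANTS

def can_select(principal: str, table: str) -> bool:
    catalog, schema, _ = table.split(".")
    schema_fqn = f"{catalog}.{schema}"
    # The USE privileges gate access to the containers...
    if not (has(principal, catalog, "USE CATALOG") and has(principal, schema_fqn, "USE SCHEMA")):
        return False
    # ...and SELECT may be granted on the table or inherited from its schema or catalog.
    return any(has(principal, s, "SELECT") for s in (table, schema_fqn, catalog))

print(can_select("analysts", "main.sales.orders"))  # True, via the schema-level grant
print(can_select("interns", "main.hr.salaries"))    # False, missing USE SCHEMA
```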

Comparing Native Security and Governance Features

This table provides a side-by-side comparison of the core security and governance features of each platform.

| Security Feature | Snowflake Implementation | Databricks Implementation | Key Takeaway |
|---|---|---|---|
| Primary Access Control | Role-Based Access Control (RBAC) is deeply integrated into the architecture. | Unity Catalog provides a unified governance layer with a mix of RBAC and ABAC. | Snowflake is role-centric and hierarchical; Databricks is more flexible and asset-centric. |
| Data Masking | Dynamic Data Masking is a native, policy-driven feature applied at query time. | Implemented via custom functions or views; less of a built-in, declarative feature. | Snowflake has a clear advantage for out-of-the-box, policy-based data masking. |
| Row-Level Security | Row Access Policies are a mature, first-class security feature. | Implemented through Row Filters and Column Masks within Unity Catalog. | Both platforms offer robust solutions, but Snowflake’s is arguably more established. |
| Data Encryption | End-to-end encryption is automatic, with customer-managed key options. | Provides similar end-to-end encryption with customer-managed key capabilities. | Both platforms meet enterprise standards for data encryption at rest and in transit. |
| Central Governance | The entire model is centralized, promoting a single source of truth for governance. | Unity Catalog centralizes governance across disparate workspaces and clouds. | Snowflake’s is centralized by design; Databricks created Unity Catalog to unify a more federated ecosystem. |
| Audit & Lineage | Provides extensive audit logs and Object Tagging for tracking and classification. | Unity Catalog captures detailed audit logs and provides automated data lineage tracking. | Databricks has a slight edge with its built-in, visual data lineage capabilities. |

Both platforms provide the necessary tools to build a secure data environment. The best choice depends on the operational model and feature set that best aligns with your team and data strategy.

Making the Right Choice for Your Needs

Neither platform is inherently “more secure.” They are optimized for different operational models and project types. The decision often depends on organizational culture and technical requirements.

If your main goal is to run a highly governed, centralized corporate data warehouse with a strict separation of duties, Snowflake’s unified security model offers simplicity and powerful, ready-to-use controls. But if your focus is on empowering decentralized teams to build advanced analytics and AI/ML products, Databricks’ flexible, catalog-driven governance provides the adaptability you’ll need.

For a deeper look at how these platforms compare on features beyond just security, check out our complete Snowflake vs Databricks comparison to get the full picture. The best platform is the one whose security philosophy best matches your own.

The Essential RFP Checklist for Data Engineering Partners

Selecting the right data engineering partner is a critical security decision. You are granting an external team deep access to your cloud environment and sensitive data. Their security competence is non-negotiable.

A polished sales presentation is not a substitute for expertise. Your Request for Proposal (RFP) must function as a rigorous security audit, with precise questions designed to reveal a partner’s ability to navigate modern cloud data security challenges.

While 83% of organizations identify cloud security as a top concern, adoption of foundational tools lags, with only 26% using platforms like CSPM. This gap underscores the need to thoroughly vet potential partners on their security stack and practical knowledge. Government agencies share this concern, with 88% citing misconfigurations as their primary security threat.

Foundational Security and Governance Questions

These initial questions establish a baseline for a partner’s security posture. Vague or generic answers are a significant red flag.

- Access Control: Describe your methodology for building and managing a least-privilege access model in a shared Databricks or Snowflake environment.

- Data Encryption: How do you handle encryption keys for data both at rest and in transit? Describe your experience with customer-managed encryption keys (CMEK).

- Compliance Frameworks: Which specific regulations (e.g., GDPR, HIPAA, SOC 2) have you implemented for clients in the cloud?

- Data Masking: Provide a project example where you used dynamic data masking or row-level security to protect sensitive PII.

- Auditing and Logging: What is your standard procedure for configuring audit logs and monitoring for anomalous activity within a client’s cloud data platform?

Technical and Architectural Scrutiny

These questions assess a team’s technical execution capabilities and their ability to build architectures that withstand real-world threats.

A partner who only talks about firewalls and passwords isn’t equipped for modern cloud security. You need a team fluent in Zero Trust, policy-as-code, and automated threat detection to build a truly resilient system.

Look for answers that demonstrate practical experience with modern security patterns.

- Incident Response: In the event of a suspected data breach in our cloud environment, walk us through your team’s step-by-step incident response process.

- Misconfiguration Remediation: Describe a situation where your team identified and remediated a critical cloud security misconfiguration for a client. What tools did you use?

- Secure CI/CD: How do you secure a data engineering CI/CD pipeline? Detail your approach to secrets management, dependency scanning, and static code analysis.

- CSPM and CNAPP Tooling: What is your hands-on experience with CSPM or CNAPP tools? Which specific platforms have you deployed and managed?

- Multi-Cloud Security: What are the unique challenges of maintaining consistent security across a multi-cloud environment (e.g., AWS and Azure)? How do you address them?

Red Flags to Watch For

Throughout the RFP process, remain vigilant for warning signs. A competent partner will welcome detailed questions and provide clear, specific answers.

Key Warning Signs:

- Over-reliance on Cloud Provider Defaults: A statement like, “AWS handles that,” indicates a misunderstanding of the Shared Responsibility Model.

- Lack of Specific Examples: An inability to provide concrete case studies or architectural diagrams from past projects suggests a lack of practical experience.

- Vague Answers on Compliance: A statement such as “we’re familiar with GDPR” is insufficient. They must describe the specific controls they implement.

- No Mention of Automation: Modern security relies on automation. A focus on manual checklists and processes indicates an outdated approach.

Using this checklist transforms your RFP from a vendor-sourcing exercise into a powerful risk management tool, ensuring you select a partner who will protect your data as if it were their own.

Your Cloud Data Security Questions, Answered

Practical questions often arise during the implementation of cloud data security. Here are straightforward answers to common queries from data leaders.

Which Is More Important: Preventing Misconfigurations Or Defending Against External Attacks?

Preventing misconfigurations is the number one priority. While both are critical, industry data consistently shows that internal errors are the primary enablers of external attacks. Gartner famously predicted that through 2025, 99% of cloud security failures will be the customer’s fault.

Securing a cloud environment is like securing a home. You lock the doors and windows (preventing misconfigurations) before installing an alarm system (defending against attacks). A correctly configured environment significantly reduces the attack surface, making external defenses far more effective. For the greatest risk reduction, focus on automated configuration management.
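A core piece of automated configuration management is drift detection: comparing the live state of each resource with the desired state kept in version control. The sketch below assumes both states are available as plain dictionaries with hypothetical setting names.

```python
# Desired state as declared in version control (hypothetical settings).
BASELINE = {
    "customer-exports": {"public_access": False, "versioning": True, "encryption": "aws:kms"},
}

def detect_drift(baseline: dict, live: dict) -> dict[str, dict[str, tuple]]:
    """Return, per resource, each setting whose live value differs: (desired, actual)."""
    drift = {}
    for resource, desired in baseline.items():
        actual = live.get(resource, {})
        diffs = {key: (value, actual.get(key)) for key, value in desired.items()
                 if actual.get(key) != value}
        if diffs:
            drift[resource] = diffs
    return drift

# Someone flipped the bucket to public through the console.
live = {"customer-exports": {"public_access": True, "versioning": True, "encryption": "aws:kms"}}
print(detect_drift(BASELINE, live))
# {'customer-exports': {'public_access': (False, True)}}
```

Run on a schedule, a check like this catches manual changes that bypass the pipeline, and the baseline doubles as the target for automated remediation.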

How Does a Multi-Cloud Strategy Complicate Data Security?

A multi-cloud strategy sharply increases security complexity. Each cloud provider (AWS, Azure, or GCP) has its own security model, identity management system, and service configurations.

This fragmentation creates blind spots and makes enforcing consistent security policies difficult. A secure configuration in AWS might be a vulnerability in Azure. The security team must become proficient in multiple, complex platforms, increasing the likelihood of error.

The real challenge with multi-cloud is losing a single source of truth for security. Without a unified control plane, you’re defending several fortresses at once, each with different blueprints and unique weak points.

Centralized governance tools are critical in this context. They act as a universal translator, providing a single pane of glass to monitor and manage security posture across otherwise disconnected environments.
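The "universal translator" idea amounts to normalizing each provider's findings into one schema and then applying a single policy. The sketch below uses input shapes loosely modeled on an EC2 security group `IpPermissions` entry and an Azure NSG security rule; both are simplified assumptions, and port ranges are ignored (only single ports are handled).

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class IngressRule:
    """Provider-neutral view of a rule that admits inbound traffic."""
    cloud: str
    resource: str
    source: str
    port: int

def from_aws(sg_id: str, permission: dict) -> list[IngressRule]:
    # Simplified shape loosely following an EC2 security group "IpPermissions" entry.
    return [IngressRule("aws", sg_id, r["CidrIp"], permission["FromPort"])
            for r in permission.get("IpRanges", [])]

def from_azure(nsg: str, rule: dict) -> list[IngressRule]:
    # Simplified shape loosely following an Azure NSG security rule's properties.
    if rule["direction"] != "Inbound" or rule["access"] != "Allow":
        return []
    source = rule["sourceAddressPrefix"]
    if source in ("*", "Internet"):
        source = "0.0.0.0/0"  # treat Azure's "any" prefixes as the open internet
    return [IngressRule("azure", nsg, source, int(rule["destinationPortRange"]))]

def exposed_admin_ports(rules: list[IngressRule], ports=frozenset({22, 3389})) -> list[IngressRule]:
    """One policy, evaluated the same way regardless of which cloud a rule came from."""
    return [r for r in rules if r.source == "0.0.0.0/0" and r.port in ports]

rules = (
    from_aws("sg-0a1b", {"IpProtocol": "tcp", "FromPort": 22, "ToPort": 22,
                         "IpRanges": [{"CidrIp": "0.0.0.0/0"}]})
    + from_azure("nsg-web", {"direction": "Inbound", "access": "Allow",
                             "sourceAddressPrefix": "Internet", "destinationPortRange": "3389"})
    + from_azure("nsg-db", {"direction": "Inbound", "access": "Allow",
                            "sourceAddressPrefix": "10.0.0.0/16", "destinationPortRange": "5432"})
)

for r in exposed_admin_ports(rules):
    print(r.cloud, r.resource, r.port)
```

The policy function never sees provider-specific fields, so adding a third cloud means writing one more adapter rather than a second copy of every rule.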

What Is the Single Biggest Mistake Companies Make in Cloud Data Security?

The most significant mistake is assuming the cloud provider’s security is sufficient. This stems from a misunderstanding of the Shared Responsibility Model.

The provider is responsible for the security of the cloud—the physical data centers, hardware, and core network. The customer is always responsible for security in the cloud.

This includes:

- Correctly configuring all deployed services and applications.

- Managing user identities and access permissions.

- Encrypting sensitive data, both at rest and in transit.

- Securing the code running on the provider’s infrastructure.

This responsibility cannot be outsourced. Overlooking the customer’s side of the model is a primary cause of breaches. A proactive, well-defined security strategy is non-negotiable.

How Can We Secure Our Data Pipelines for AI and ML Initiatives?

As AI and machine learning become integral to business operations, the data pipelines that feed them have become prime targets. Security must be integrated into these pipelines from the start.

An effective security checklist should cover the entire data lifecycle:

- Lock Down Data Access: Apply strict least-privilege controls to the data lakes and warehouses that store training data.

- Encrypt Everything: Encrypt all data in transit as it moves through the pipeline and at rest in any temporary staging areas or feature stores.

- Map Your Data Lineage: Understand the complete history of your data—its origin, transformations, and usage in models. This is crucial for governance and incident response.

- Scan Your Code Constantly: Integrate vulnerability scanning directly into the CI/CD pipeline (DevSecOps). This prevents insecure code or vulnerable third-party libraries from creating backdoors to your data.
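Lineage pays off during incident response: if a source dataset is found to be tampered with, you need every downstream table, feature, and model it fed. The sketch below assumes lineage is available as a hypothetical adjacency map of upstream asset to derived assets and walks it breadth-first.

```python
from collections import deque

# Hypothetical lineage edges: upstream asset -> assets derived from it.
LINEAGE = {
    "raw.crm_contacts": ["staging.customers"],
    "raw.web_events": ["staging.sessions"],
    "staging.customers": ["features.customer_profile"],
    "staging.sessions": ["features.customer_profile", "features.engagement"],
    "features.customer_profile": ["models.churn_v3"],
    "features.engagement": ["models.recommender_v1"],
}

def downstream(asset: str) -> list[str]:
    """Everything derived, directly or indirectly, from `asset`, in breadth-first order."""
    seen, order, queue = {asset}, [], deque([asset])
    while queue:
        for child in LINEAGE.get(queue.popleft(), []):
            if child not in seen:
                seen.add(child)
                order.append(child)
                queue.append(child)
    return order

# If raw.web_events is found to be poisoned, these assets need review or retraining.
print(downstream("raw.web_events"))
```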

Finding the right team to implement these complex security controls is just as critical as the strategy itself. DataEngineeringCompanies.com offers expert-vetted rankings of top data engineering firms, helping you choose a partner with a proven track record in security. Explore our 2025 rankings to find the right fit for your next project.

Data-driven market researcher with 20+ years in market research and 10+ years helping software agencies and IT organizations make evidence-based decisions. Former market research analyst at Aviva Investors and Credit Suisse.

Previously: Aviva Investors · Credit Suisse · Brainhub · 100Signals
